Piping is probably one of the most underutilized features of Powershell that I’ve seen in the wild. Supporting pipes in Powershell allows you to write code that is much more expressive than simple imperative programming. However, most Powershell documentation does not do a good job of demonstrating how to think about pipable functions. In this tutorial, we will start with functions written the “standard” way and convert them step-by-step to support pipes.

Here’s a simple rule of thumb: if you find yourself writing a foreach loop in Powershell with more than just a line or two in the body, you might be doing something wrong.

Consider the following output from a function called Get-Team:

Name Value

---- -----

Chris Manager

Phillip Service Engineer

Andy Service Engineer

Neil Service Engineer

Kevin Service Engineer

Rick Software Engineer

Mark Software Engineer

Miguel Software Engineer

Stewart Software Engineer

Ophelia Software Engineer
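Get-Team itself is never shown in this tutorial. If you want to run the examples, a minimal stand-in might look like the sketch below. The [pscustomobject] shape is an assumption, chosen only because it yields objects with the Name and Value properties shown in the output above.

```powershell
# Hypothetical stand-in for Get-Team; the real implementation is not shown in this post.
function Get-Team {
    $members = @(
        @('Chris',   'Manager'),
        @('Phillip', 'Service Engineer'),
        @('Andy',    'Service Engineer'),
        @('Neil',    'Service Engineer'),
        @('Kevin',   'Service Engineer'),
        @('Rick',    'Software Engineer'),
        @('Mark',    'Software Engineer'),
        @('Miguel',  'Software Engineer'),
        @('Stewart', 'Software Engineer'),
        @('Ophelia', 'Software Engineer')
    )

    foreach ($member in $members) {
        # Emit one object per team member so each one travels down the pipe individually.
        [pscustomobject]@{ Name = $member[0]; Value = $member[1] }
    }
}
```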

Let’s say I want to output the name and title. I might write the Powershell as follows:

$data = Get-Team

foreach($item in $data) {

write-host "Name: $($item.Name); Title: $($item.Value)"

}

I could also use the Powershell ForEach-Object function to do this instead of the foreach block.

# % is a short-cut to ForEach-Object

Get-Team | %{

write-host "Name: $($_.Name); Title: $($_.Value)"

}

This is pretty clean given that the foreach block is only one line. I’m going to ask you to use your imagination and pretend that our logic is more complex than that. In a situation like that I would prefer to write something that looks more like the following:

Get-Team | Format-TeamMember

But how do you write a function like Format-TeamMember that can participate in the piping behavior of Powershell? There is documentation about this, but it is often far from the introductory documentation, and thus I have rarely seen it used by engineers in their day-to-day scripting in the real world.

The Naive Solution

Let’s start with the naive solution and evolve the function toward something more elegant.

Function Format-TeamMember() {

param([Parameter(Mandatory)] [array] $data)

$data | %{

write-host "Name: $($_.Name); Title: $($_.Value)"

}

}

# Usage

$data = Get-Team

Format-TeamMember -Data $Data

At this point the function is just a wrapper around the foreach loop from above and thus adds very little value beyond isolating the foreach logic.

Let me draw your attention to the $data parameter. It’s defined as an array which is good since we’re going to pipe the array to a foreach block. The first step toward supporting pipes in Powershell functions is to convert list parameters into their singular form.

Convert to Singular

Function Format-TeamMember() {

param([Parameter(Mandatory)] $item)

write-host "Name: $($item.Name); Title: $($item.Value)"

}

# Usage

Get-Team | %{

Format-TeamMember -Item $_

}

Now that we’ve converted Format-TeamMember to work with single elements, we are ready to add support for piping.

Begin, Process, End

The Powershell pipe functionality requires a little extra overhead to support. A pipable function can define three blocks, and once you use any of them, all of your executable code must be defined in one of those blocks.

Begin fires once, before the first element in the pipe is processed (when the pipe opens). Use this block to initialize the function with data that can be cached over the lifetime of the pipe.

Process fires once per element in the pipe.

End fires once, after the last element in the pipe is processed (when the pipe closes). Use this block to clean up after the pipe executes.
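To see the three blocks cooperate, here is a small sketch that counts the items flowing through the pipe. Measure-PipeLength is a made-up name used only for illustration, and its parameter uses the ValueFromPipeline attribute so that the function can accept piped input at all.

```powershell
# Hypothetical helper: counts the items that pass through the pipe.
function Measure-PipeLength {
    param([Parameter(ValueFromPipeline)] $InputObject)

    Begin {
        # Runs once, before any input arrives: set up state shared by every Process call.
        $count = 0
    }
    Process {
        # Runs once per piped item.
        $count++
    }
    End {
        # Runs once, after the last item: emit the accumulated result.
        $count
    }
}

1..5 | Measure-PipeLength   # emits 5
```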

Let’s add these blocks to Format-TeamMember.

Function Format-TeamMember() {

param([Parameter(Mandatory)] $item)

Begin {

write-host "Format-TeamMember: Begin" -ForegroundColor Green

}

Process {

write-host "Name: $($item.Name); Title: $($item.Value)"

}

End {

write-host "Format-TeamMember: End" -ForegroundColor Green

}

}

# Usage

Get-Team | Format-TeamMember

#Output

cmdlet Format-TeamMember at command pipeline position 2

Supply values for the following parameters:

item:

Oh noes! Now Powershell is asking for manual input! No worries–There’s one more thing we need to do to support pipes.

ValueFromPipeLine… ByPropertyName

If you want data to be piped from one function into the next, you have to tell the receiving function which parameters will be received from the pipeline. You do this by means of two attributes: ValueFromPipeline and ValueFromPipelineByPropertyName.

ValueFromPipeline

The ValueFromPipeline attribute tells the Powershell function that it will receive the whole value from the previous function in the pipe.

Function Format-TeamMember() {

param([Parameter(Mandatory, ValueFromPipeline)] $item)

Begin {

write-host "Format-TeamMember: Begin" -ForegroundColor Green

}

Process {

write-host "Name: $($item.Name); Title: $($item.Value)"

}

End {

write-host "Format-TeamMember: End" -ForegroundColor Green

}

}

# Usage

Get-Team | Format-TeamMember

#Output

Format-TeamMember: Begin

Name: Chris; Title: Manager

Name: Phillip; Title: Service Engineer

Name: Andy; Title: Service Engineer

Name: Neil; Title: Service Engineer

Name: Kevin; Title: Service Engineer

Name: Rick; Title: Software Engineer

Name: Mark; Title: Software Engineer

Name: Miguel; Title: Software Engineer

Name: Stewart; Title: Software Engineer

Name: Ophelia; Title: Software Engineer

Format-TeamMember: End

ValueFromPipelineByPropertyName

This is great! We’ve really moved things forward! But we can do better.

Our Format-TeamMember function now requires knowledge of the schema of the data from the calling function. The function is not self-contained in a way to make it maintainable or usable in other contexts. Instead of piping the whole object into the function, let’s pipe the discrete values the function depends on instead.

Function Format-TeamMember() {

param(

[Parameter(Mandatory, ValueFromPipelineByPropertyName)] [string] $Name,

[Parameter(Mandatory, ValueFromPipelineByPropertyName)] [string] $Value

)

Begin {

write-host "Format-TeamMember: Begin" -ForegroundColor Green

}

Process {

write-host "Name: $Name; Title: $Value"

}

End {

write-host "Format-TeamMember: End" -ForegroundColor Green

}

}

# Usage

Get-Team | Format-TeamMember

# Output

Format-TeamMember: Begin

Name: Chris; Title: Manager

Name: Phillip; Title: Service Engineer

Name: Andy; Title: Service Engineer

Name: Neil; Title: Service Engineer

Name: Kevin; Title: Service Engineer

Name: Rick; Title: Software Engineer

Name: Mark; Title: Software Engineer

Name: Miguel; Title: Software Engineer

Name: Stewart; Title: Software Engineer

Name: Ophelia; Title: Software Engineer

Format-TeamMember: End

Alias

In our last refactoring, we set out to make Format-TeamMember self-contained. Our introduction of the Name and Value parameters decouples us from having to know the schema of the previous object in the pipeline–almost. We had to name our parameter Value, which is not really how Format-TeamMember thinks of that value. It thinks of it as the Title, but in the context of our contrived module, Value is sometimes another name that is used. In Powershell, you can use the Alias attribute to support multiple names for the same parameter.

Function Format-TeamMember() {

param(

[Parameter(Mandatory, ValueFromPipelineByPropertyName)] [string] $Name,

[Alias("Value")]

[Parameter(Mandatory, ValueFromPipelineByPropertyName)] [string] $Title # Change the name to Title

)

Begin {

write-host "Format-TeamMember: Begin" -ForegroundColor Green

}

Process {

write-host "Name: $Name; Title: $Title" # Use the newly renamed parameter

}

End {

write-host "Format-TeamMember: End" -ForegroundColor Green

}

}

# Usage

Get-Team | Format-TeamMember

# Output

Format-TeamMember: Begin

Name: Chris; Title: Manager

Name: Phillip; Title: Service Engineer

Name: Andy; Title: Service Engineer

Name: Neil; Title: Service Engineer

Name: Kevin; Title: Service Engineer

Name: Rick; Title: Software Engineer

Name: Mark; Title: Software Engineer

Name: Miguel; Title: Software Engineer

Name: Stewart; Title: Software Engineer

Name: Ophelia; Title: Software Engineer

Format-TeamMember: End

Pipe Forwarding

Our Format-TeamMember function now supports receiving data from the pipe, but it does not return any information that can be forwarded to the next function in the pipeline. We can change that by returning the formatted line instead of calling Write-Host.

Function Format-TeamMember() {

param(

[Parameter(Mandatory, ValueFromPipelineByPropertyName)] [string] $Name,

[Alias("Value")]

[Parameter(Mandatory, ValueFromPipelineByPropertyName)] [string] $Title # Change the name to Title

)

Begin {

# Do one-time operations needed to support the pipe here

}

Process {

return "Name: $Name; Title: $Title" # Use the newly renamed parameter

}

End {

# Cleanup before the pipe closes here

}

}

# Usage

[array] $output = Get-Team | Format-TeamMember

write-host "The output contains $($output.Length) items:"

$output | Out-Host

# Output

The output contains 10 items:

Name: Chris; Title: Manager

Name: Phillip; Title: Service Engineer

Name: Andy; Title: Service Engineer

Name: Neil; Title: Service Engineer

Name: Kevin; Title: Service Engineer

Name: Rick; Title: Software Engineer

Name: Mark; Title: Software Engineer

Name: Miguel; Title: Software Engineer

Name: Stewart; Title: Software Engineer

Name: Ophelia; Title: Software Engineer

Filtering

This is a lot of information. What if we wanted to filter the data so that we only see the people with the title “Service Engineer?” Let’s implement a function that filters data out of the pipe.

function Find-Role(){

param(

[Parameter(Mandatory, ValueFromPipeline)] $item,

[switch] $ServiceEngineer

)

Begin {

}

Process {

if ($ServiceEngineer) {

if ($item.Value -eq "Service Engineer") {

return $item

}

}

if (-not $ServiceEngineer) {

# if no filter is requested then return everything.

return $item

}

return; # not technically required but shows the exit when an item is filtered out.

}

End {

}

}

This should be self-explanatory for the most part. Let me draw your attention, though, to the return; statement that isn’t technically required. A mistake I’ve seen made in this scenario is to return $null. If you return $null, it adds $null to the pipeline as if it were a return value. If you want to exclude an item from being forwarded through the pipe, you must not return anything. While the return; statement is not syntactically required by the language, I find it helpful to communicate my intention that I am deliberately not adding an element to the pipe.
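The difference between return $null and a bare return is easy to check for yourself. The following sketch uses two throwaway filter functions (their names are invented for this example) that keep odd numbers and differ only in how they skip the even ones.

```powershell
# Hypothetical filter that skips even numbers by returning $null.
function Select-OddWithNull {
    param([Parameter(ValueFromPipeline)] [int] $Number)
    Process {
        if ($Number % 2 -eq 1) { return $Number }
        return $null   # adds $null to the pipe
    }
}

# Hypothetical filter that skips even numbers with a bare return.
function Select-Odd {
    param([Parameter(ValueFromPipeline)] [int] $Number)
    Process {
        if ($Number % 2 -eq 1) { return $Number }
        return         # adds nothing to the pipe
    }
}

$withNull = @(1..4 | Select-OddWithNull)
$clean    = @(1..4 | Select-Odd)

$withNull.Count   # 4: the items are 1, $null, 3, $null
$clean.Count      # 2: the items are 1, 3
```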

Now let’s look at usage:

Get-Team | Find-Role | Format-TeamMember # No Filter

Name: Chris; Title: Manager

Name: Phillip; Title: Service Engineer

Name: Andy; Title: Service Engineer

Name: Neil; Title: Service Engineer

Name: Kevin; Title: Service Engineer

Name: Rick; Title: Software Engineer

Name: Mark; Title: Software Engineer

Name: Miguel; Title: Software Engineer

Name: Stewart; Title: Software Engineer

Name: Ophelia; Title: Software Engineer

Get-Team | Find-Role -ServiceEngineer | Format-TeamMember # Filtered

Name: Phillip; Title: Service Engineer

Name: Andy; Title: Service Engineer

Name: Neil; Title: Service Engineer

Name: Kevin; Title: Service Engineer

Summary

Notice how clean the function composition is: Get-Team | Find-Role -ServiceEngineer | Format-TeamMember!

Pipable functions are a powerful language feature of Powershell <rimshots/>. Writing pipable functions allows you to compose logic in a way that is more expressive than simple imperative scripting. I hope this tutorial demonstrated to you how to modify existing Powershell functions to support pipes.

There doesn’t seem to be much guidance as to the internal structure of a Powershell module. There’s a lot of “you can do it this way or that way” guidance, but little “this has worked well for me and that hasn’t.” As a patterns and practices guy, I’m dissatisfied with this state of affairs. In this post I will describe the module structure I use and the reasons it works well for me.

I’ve captured the structure in a sample module for you to reference.

Posh.psd1

This is a powershell module manifest. It contains the metadata about the powershell module, including the name, version, unique id, dependencies, etc.

It’s very important that the Module id is unique as re-using a GUID from one module to another will potentially create conflicts on an end-user’s machine.
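One way to avoid reusing a GUID by accident is to let Powershell generate the manifest. The sketch below is not how the sample module was created; it simply shows the built-in New-ModuleManifest cmdlet writing a starter manifest with a fresh GUID. The temp-folder path and the author name are placeholders.

```powershell
# Write a starter manifest to the temp folder (placeholder location).
$manifestPath = Join-Path ([System.IO.Path]::GetTempPath()) 'Posh.psd1'

New-ModuleManifest -Path $manifestPath `
    -ModuleVersion '1.0' `
    -Guid ([guid]::NewGuid()) `
    -Author 'your-name-here'

# Show the GUID line that was generated for this manifest.
Select-String -Path $manifestPath -Pattern '^GUID'
```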

I don’t normally use a lot of options in the manifest, but having the manifest in place at the beginning makes it easier to expand as you need new options. Here is my default psd1 implementation:

@{

# Version number of this module.

ModuleVersion = '1.0'

# Supported PSEditions

# CompatiblePSEditions = @()

# ID used to uniquely identify this module

GUID = '2a97124e-d73e-49ad-acd7-1ea5b3dba0ba'

# Author of this module

Author = 'chmckenz'

# Company or vendor of this module

CompanyName = 'ISG Inc'

# Copyright statement for this module

Copyright = '(c) 2018 chmckenz. All rights reserved.'

# Script module file loaded when this module is imported

ModuleToProcess = "Posh.psm1"

}

Posh.psm1

This is the module file that contains or loads your functions. While it is possible to write all your module functions in one file, I prefer to separate each function into its own file.

My psm1 file is fairly simple.

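# $PSScriptRoot is the module's own folder, so the paths resolve no matter where the caller's current directory is.

gci -path "$PSScriptRoot\export","$PSScriptRoot\private" -Filter *.ps1 -Recurse | %{

. $_.FullName

}

gci -path "$PSScriptRoot\export" -Filter *.ps1 -Recurse | %{

Export-ModuleMember $_.BaseName

}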

The first gci block loads all of the functions in the Export and Private directories. The -Recurse argument allows me to group functions into subdirectories as appropriate in larger modules.

The second gci block exports only the functions in the Export directory. Notice the use of the -Recurse argument again.

With this structure, my psd1 & psm1 files do not have to change as I add new functions.

Export Functions

I keep functions I want the module to export in this directory. This makes them easy to identify and to export from the .psm1 file.

It is important to distinguish functions you wish to expose to clients from private functions for the same reason you wouldn’t make every class & function public in a nuget package. A Module is a library of functionality. If you expose its internals then clients will become dependent on those internals making it more difficult to modify your implementation.

You should think of public functions like you would an API. Its shape should be treated as immutable as much as possible.

Private Functions

I keep helper functions I do not wish to expose to module clients here. This makes it easy to exclude them from the calls to Export-ModuleMember in the .psm1 file.
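To see what Export-ModuleMember buys you without creating any files, here is a small sketch using an in-memory module built with New-Module. The module and function names are invented for this example; only the exported function is callable from the session, while the helper stays usable inside the module.

```powershell
# Build a throwaway in-memory module (hypothetical names throughout).
$module = New-Module -Name Demo -ScriptBlock {
    # Public function: part of the module's API.
    function Get-Greeting { "Hello, $(Get-Name)" }

    # Private helper: used internally, never exported.
    function Get-Name { 'world' }

    Export-ModuleMember -Function Get-Greeting
}

Get-Greeting                                          # the public function can call the private helper
Get-Command Get-Name -ErrorAction SilentlyContinue    # returns nothing: the helper is not visible
```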

Tests

The Tests directory contains all of my Pester tests. Until a few years ago I didn’t know you could write tests for Powershell. I discovered Pester and assigned a couple of my interns to figure out how to use it. They did and they taught me. Now I can practice TDD with Powershell–and so can you.

Other potential folders

When publishing my modules via PowershellGallery or Chocolatey I have found it necessary to add additional folders & scripts to support the packaging & deployment of the module. I will follow-up with demos of how to do that in a later post.

Summary

I’ve put a lot of thought into how I structure my Powershell modules. These are my “best practices,” but in a world where Powershell best practices are rarely discussed your mileage may vary. Consider this post an attempt to start a conversation.

There is a shorthand syntax that can be applied to arrays to apply filtering. Consider the following syntactically correct Powershell:

1,2,3,4,5 | ?{ $_ -gt 2 } # => 3,4,5

You can write the same thing in a much simpler fashion as follows:

1,2,3,4,5 -gt 2 # => 3,4,5

In the second example, Powershell is applying the expression -gt 2 to the elements of the array and returning the matching items.
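The same filtering behavior applies to the other comparison operators when the left-hand side is an array. A short sketch, using an arbitrary list of names:

```powershell
$names = 'Chris', 'Phillip', 'Andy', 'Neil'

$names -like 'P*'       # wildcard match => Phillip
$names -match '^[AN]'   # regex match    => Andy, Neil
$names -ne 'Chris'      # exclusion      => Phillip, Andy, Neil
```

One consequence worth keeping in mind: because an array on the left turns the comparison into a filter, a null check against a variable that might hold an array is safer with $null on the left, as in $null -eq $names.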

Null Coalesce

Unfortunately, Powershell versions before 7.0 lack a true null coalesce operator (7.0 added ??). Fortunately, we can simulate that behavior using array comparisons.

($null, $null, 5,6, $null, 7).Length # => 6

($null, $null, 5,6, $null, 7 -ne $null).Length # => 3

($null, $null, 5,6, $null, 7 -ne $null)[0] # => 5
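If you use this trick often, it can be wrapped in a tiny helper. Get-FirstNonNull is a name I made up for this sketch; it relies on the automatic $args array and the same -ne $null filter shown above.

```powershell
# Hypothetical helper: returns the first argument that is not $null.
function Get-FirstNonNull {
    # $args holds every value passed in; drop the nulls and take the first survivor.
    ($args -ne $null)[0]
}

Get-FirstNonNull $null $null 5 6   # => 5
Get-FirstNonNull $null 0 'x'       # => 0 (zero is a value, not null)
```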

Destructuring

What is destructuring?

Destructuring is a convenient way of extracting multiple values from data stored in (possibly nested) objects and Arrays. It can be used in locations that receive data (such as the left-hand side of an assignment).

Here is an example of destructuring in powershell.

$first, $second, $therest = 1,2,3,4,5

$first

1

$second

2

$therest

3

4

5

As you can see, Powershell assigns the first and second values in the array to the variables $first and $second. The remaining items are then assigned to the last variable in the assignment list.
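Two everyday uses of the same syntax, sketched with made-up values: splitting a string into named parts, and swapping two variables without a temporary.

```powershell
# Split a string and name the pieces in one step.
$user, $domain = 'chris@example.com' -split '@'
$user     # chris
$domain   # example.com

# Swap two variables without a temporary.
$a, $b = 1, 2
$a, $b = $b, $a
$a        # 2
$b        # 1
```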

Gotchas

If we look at the following Powershell code nothing seems out of the ordinary.

$arr = @(1)

$arr.GetType().FullName

System.Object[]

However, look at this code sample:

# When Function Returns No Elements

Function Get-Array() {

return @()

}

$arr = Get-Array

$arr.GetType()

You cannot call a method on a null-valued expression.

At line:1 char:1

+ $arr.GetType()

+ ~~~~~~~~~~~~~~

+ CategoryInfo : InvalidOperation: (:) [], RuntimeException

+ FullyQualifiedErrorId : InvokeMethodOnNull

$arr -eq $null

True

# When Function Returns One Element

Function Get-Array() {

return @(1)

}

$arr = Get-Array

$arr.GetType().FullName

System.Int32

# When Function Returns Multiple Elements

Function Get-Array() {

return @(1,2)

}

$arr = Get-Array

$arr.GetType().FullName

System.Object[]

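When returning arrays from functions, the default Powershell behavior is to unroll the array and send its elements down the pipeline one at a time. An empty array therefore arrives as $null, and an array with a single element arrives as that element rather than as an array. This can sometimes lead to confusing results.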

You can override this behavior by prepending the resultant array with a ‘,’ which tells Powershell that the return type should not be destructured:

# When Function Returns No Elements

Function Get-Array() {

return ,@()

}

$arr = Get-Array

$arr.GetType().FullName

System.Object[]

# When Function Returns One Element

Function Get-Array() {

return ,@(1)

}

$arr = Get-Array

$arr.GetType().FullName

System.Object[]

# When Function Returns Multiple Elements

Function Get-Array() {

return ,@(1,2)

}

$arr = Get-Array

$arr.GetType().FullName

System.Object[]
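If you do not control the function, the caller can get the same guarantee by wrapping the call in the array subexpression operator @( ). This is an alternative to the leading comma, not something the examples above require; the function names below are placeholders.

```powershell
# Placeholder functions that return arrays the ordinary way (no leading comma).
function Get-Nothing { return @() }
function Get-OneItem { return @(1) }

$none = @(Get-Nothing)
$one  = @(Get-OneItem)

$none.GetType().FullName   # System.Object[]
$none.Count                # 0
$one.GetType().FullName    # System.Object[]
$one.Count                 # 1
```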

As part of our Octopus Deploy migration effort we are writing a powershell module that we use to automatically bootstrap the Tentacle installation into Octopus. This involves maintaining metadata about machines and environments outside of Octopus. The reason we need this capability is to adhere to the “cattle vs. pets” approach to hardware. We want to be able to destroy and recreate our machines at will and have them show up again in Octopus ready to receive deployments.

Our initial implementation cycled through one machine at a time, installing Tentacle, registering it with Octopus (with the same security certificate so that Octopus recognizes it as the same machine), then moving on to the next machine. This is fine for small environments with few machines, but not awesome for larger environments with many machines. If it takes 2m to install Tentacle and I have 30 machines, I’m waiting an hour to be able to use the environment. With this problem in mind I decided to figure out how we could parallelize the bootstrapping of machines in our Powershell module.

Start-Job

Start-Job is one of a family of Powershell functions created to support asynchrony. Other related functions are Get-Job, Wait-Job, Receive-Job, and Remove-Job. In its most basic form, Start-Job accepts a script block as a parameter and executes it in a background process.

# executes "dir" in a background process.

$job = Start-Job -ScriptBlock { dir }

The job object returned by Start-Job gives you useful information such as the job id, name, and current state. You can run Get-Job to get a list of running jobs, Wait-Job to wait on one or more jobs to complete, Receive-Job to get the output of each job, and Remove-Job to delist jobs in the current Powershell session.
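Put together, the full lifecycle of a single job looks roughly like this. The script block and its argument are arbitrary; the point is only the order of the calls.

```powershell
# Start a background job that doubles its argument.
$job = Start-Job -ScriptBlock { param($x) $x * 2 } -ArgumentList 21

# Block until the job finishes, then collect whatever it wrote to its output.
$result = $job | Wait-Job | Receive-Job

# Remove the finished job from the current session's job list.
Remove-Job $job

$result   # 42
```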

Complexity

If that’s all there was to it, I wouldn’t be writing this blog post. I’d just tweet the link to the Start-Job msdn page and call it done. My scenario is that I need to bootstrap machines using code defined in my Powershell module, but run those commands in a background process. I also need to collate and log the output of those processes as well as report on the success/failure of each job.

When you call Start-Job in Powershell it creates a new session in which currently loaded modules are not automatically loaded. If you have your powershell module in the $env:PSModulePath you’re probably okay. However, there is a difference between the version of the module I’m currently working on and testing vs. the one I have on my machine for normal use.

Start-Job has an additional parameter for a script block used to initialize the new Powershell session prior to executing your background process. The difficulty is that while you can pass arguments to the background process script block, you cannot pass arguments to the initialization script. Here’s how you make it all work.

Setup Code

# Store the working module path in an environment variable so that the new powershell session can locate the correct version of the module.

# The environment variable will not persist beyond the current powershell session so we don't have to worry about polluting our machine state.

$env:OctobootModulePath = (get-module Octoboot).Path

$init = {

# When initializing the new session, use the -Force parameter in case a different version of the module is already loaded by a profile.

import-module $env:OctobootModulePath -Force

}

# create a parameterized script block

$scriptBlock = {

Param(

$computerName,

$environment,

$roles,

$userName,

$password,

$apiKey

)

Install-Tentacle -computer $computerName `

-environment $environment `

-roles $roles `

-userName $userName -password $password `

-apiKey $apiKey

}

# I like to use an -Async switch on the controlling function. Debugging issues is easier in a synchronous context than in an async context. Making the async functionality optional is a win.

if ($async) {

$job = Start-Job `

-ScriptBlock $scriptBlock `

-InitializationScript $init `

-Name "Install Tentacle on $($computerName)" `

-ArgumentList @(

$computerName, $environment, $roles,

$userName, $password,

$apiKey) -Debug:$debug

} else {

Install-Tentacle -computer $computerName `

-environment $environment `

-roles $roles `

-userName $userName -password $password `

-apiKey $apiKey

}

The above code is in a loop in the controlling powershell function. After I’ve kicked off all of the jobs I’m going to execute, I just need to wait on them to finish and collect their results.

Finalization Code

if ($async) {

$jobs = get-job

$jobs | Wait-Job | Receive-Job

$jobs | foreach {

$job = $_

write-host "$($job.Id) - $($job.Name) - $($job.State)"

}

$jobs | remove-job

}
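For the success/failure reporting, the State property on each job is usually enough. The sketch below is not the Octoboot code; it starts two toy jobs (one of which throws) and picks out the failed one. Reading the failure reason from ChildJobs[0].JobStateInfo.Reason reflects how I understand local background jobs to be structured, so treat that line as an assumption to verify in your own environment.

```powershell
# Two toy jobs: one succeeds, one fails with a terminating error.
$demoJobs = @(
    Start-Job -Name 'ok'  -ScriptBlock { 'done' }
    Start-Job -Name 'bad' -ScriptBlock { throw 'boom' }
)

# Wait for both jobs to finish without printing the job table.
$demoJobs | Wait-Job | Out-Null

# A job whose script block throws ends up in the Failed state.
$failed = @($demoJobs | Where-Object { $_.State -eq 'Failed' })

$failed | ForEach-Object {
    # Assumption: the child job carries the exception that stopped the script block.
    write-host "$($_.Name) failed: $($_.ChildJobs[0].JobStateInfo.Reason.Message)"
}

$demoJobs | Remove-Job
```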

Since each individual job is now running in parallel, bootstrapping large environments doesn’t take much longer than bootstrapping smaller ones. The end result is that hour is now reduced to a few minutes.

Vim & Vundle

I finally bit the bullet and learned to use Vim competently. One of the things I was really impressed by in the Vim space is a plugin called Vundle. Vundle is a package manager for Vim plugins. At first, swimming in a sea of package managers, I was loath to learn another one–but Vundle is extremely simple. It uses github as a package repository. “Installing” a package is basically as simple as running a git clone to the right location. Updating the package is a git pull. Security is managed by github. Genius.

Powershell

As a developer on Windows I find Powershell to be an extremely useful tool, especially when running in an excellent terminal emulator such as ConEmu. One of the problems that Powershell has is that there is no good way to install modules. The closest thing is PsGet.

What’s wrong with PsGet?

Nothing. PsGet is great. However, not every powershell module can be made public, and not every powershell module developer goes through the process of registering their modules at PsGet.

Introducing Psundle

I thought to myself, “Hell, if Vundle can install modules directly from github, I should be able to implement something similar in Powershell” and Psundle was born.

Psundle is a package manager for Powershell modules written in Powershell. Its only dependency is that git is available in the PATH.

Disclaimer

Psundle is an alpha-quality product. It works, but API details may change. It will improve if you use it and submit your issues and/or Pull Requests through github.

Installation

You can install Psundle by running the following script in powershell:

iex ((new-object net.webclient).DownloadString('https://raw.githubusercontent.com/crmckenzie/psundle/master/tools/install.ps1'))

In your powershell profile, make sure you Import-Module Psundle. Your powershell profile is located at

"$home/Documents/WindowsPowershell/Profile.ps1"

I advise that you don’t just run some dude’s script you found on the internet. Review the script first (it’s easy to understand). Please, please, please report any installation errors with the self-installer.

Installing Powershell Modules With Psundle

Install-PsundleModule "owner" "repo"

For example, if you want to install the module I wrote for managing ruby versions on Windows, you would run:

Install-PsundleModule "crmckenzie" "psundle-ruby" http://github.com/crmckenzie/psundle-ruby

What does this accomplish?

As long as you have imported the Psundle module in your profile, Psundle will automatically load any modules it manages into your powershell session.

Other Features

Show-PsundleEnvironment

Executing Show-PsundleEnvironment gives output like this:

| Module | Path | Updates | HasUpdates |
|---|---|---|---|
| Psundle | C:\Users\Username\Documents\WindowsPowerShell\Modu… | {dbed58a \| Updating readme to resolve installation … | True |
| ChefDk | C:\Users\Username\Documents\WindowsPowerShell\Modu… | | False |
| Ruby | C:\Users\Username\Documents\WindowsPowerShell\Modu… | | False |
| VSCX | C:\Users\Username\Documents\WindowsPowerShell\Modu… | | False |

Update-PsundleModule

I can update a module by running:

Update-PsundleModule "owner" "repo"

If I’m feeling brave, I can also Update-PsundleModules to update everything in one step.

Requirements For Installed Modules

Because Psundle ultimately just uses git clone to install powershell modules, Powershell modules in github need to be in the same structure that would be installed on disk.

Primarily, this means that the psm1 and psd1 files for the module should be in the repo root.

Commitment to Develop

I’m making a blind commitment to maintain this module through the end of 2016. “Maintenance” means I will answer issues and respond to pull requests for at least that length of time.

Whether I continue maintaining the module depends on whether or not people use it.

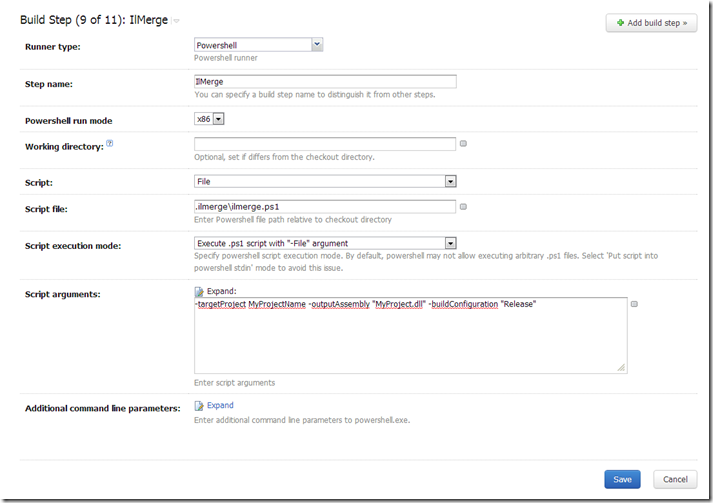

I wanted to ILMerge an assembly in a TeamCity project. TeamCity has a Powershell build step that you can use to run your own arbitrary scripts. Here’s how I did it.

TeamCity Configuration

Powershell Script

Add this script to your powershell profile. If you don’t know where your powershell profile is, open a powershell session and type $profile and press <Enter>. In Windows 7, you can run powershell from the current folder by typing powershell in the address bar of windows explorer.

#Set environment variables for Visual Studio Command Prompt

$vspath = (get-childitem env:VS100COMNTOOLS).Value

$vsbatchfile = "vsvars32.bat";

$vsfullpath = [System.IO.Path]::Combine($vspath, $vsbatchfile);

#$_ shortcut represents arguments

pushd $vspath

cmd /c "$vsbatchfile & set" |

foreach {

if ($_ -match "=") {

$v = $_ -split "=", 2;

set-item -force -path "ENV:\$($v[0])" -value "$($v[1])"

}

}

popd

write-host "Visual Studio 2010 Command Prompt variables set." -ForegroundColor Red

The benefits of using NuGet to manage your project dependencies should be well-known by now.

If not, read this.

There’s one area where NuGet is still somewhat deficient: Team Workflow.

Ideally, when you begin work on a new solution, you should follow these steps:

1) Get the latest version of the source code.

2) Run a command to install any dependent packages.

3) Build!

It’s step 2 in this process that is causing some trouble. NuGet does offer a command to install packages for a single project, but the developer is required to run this command for each project that has package references. It would be nice if NuGet would install all packages for all projects using packages/repositories.config, but at the time of this writing it will not. However, it is fairly easy to add a powershell function to the NuGet package manager console that will do this for you.

First, you should download the NuGet executable and add its directory to your PATH Environment variable. I placed mine in C:\dev\utils\NuGet\<version>. You’ll need to be able to access the executable from the command-line.

Second, you need to identify the expected location of the NuGet Powershell profile. You can do this by launching Visual Studio, opening the Package Manager Console, typing $profile, and pressing enter. The console will show you the expected profile path. In Windows 7 it will probably be: “C:\Users\<user>\Documents\WindowsPowerShell\NuGet_profile.ps1”

Next you need to create a file with that name in that directory.

Next, paste the following powershell code into the file:

function Install-NuGetPackagesForSolution()

{

write-host "Installing packages for the following projects:"

$projects = get-project -all

$projects

foreach($project in $projects)

{

Install-NuGetPackagesForProject($project)

}

}

function Install-NuGetPackagesForProject($project)

{

if ($project -eq $null)

{

write-host "Project is required."

return

}

$projectName = $project.ProjectName;

write-host "Checking packages for project $projectName"

write-host ""

$projectPath = $project.FullName

$projectPath

$dir = [System.IO.Path]::GetDirectoryName($projectPath)

$packagesConfigFileName = [System.IO.Path]::Combine($dir, "packages.config")

$hasPackagesConfig = [System.IO.File]::Exists($packagesConfigFileName)

if ($hasPackagesConfig -eq $true)

{

write-host "Installing packages for $projectName using $packagesConfigFileName."

nuget i $packagesConfigFileName -o ./packages

}

}

Restart Visual Studio 2010. After the package manager console loads, you should be able to run the Install-NuGetPackagesForSolution command. The command will iterate over each of your projects, check to see if the project contains a packages.config file, and if so run NuGet install against the packages.config.

You may also wish to do this from the PowerShell console outside of Visual Studio. If you are in the solution root directory you can run the following two commands:

$files = gci -Filter packages.config -Recurse

$files | ForEach-Object {nuget i $_.FullName -o .\packages}