System.Enum is a powerful type to use in the .NET Framework. It’s best used when the list of possible states is known and is defined by the system that uses it. Enumerations are not a good fit for any situation that requires third-party extensibility. While I love enumerations, they do present some problems.

First, the code that uses them often exists in the form of switch statements that get repeated throughout the code base. This violation of DRY is not good.

Second, if a new value is added to the enumeration, any code that relies on the enumeration must take the new value into account. While not technically a violation of the LSP, it’s close.

Third, enums are limited in what they can express. They are basically a set of named integer constants treated as a separate type. Any other data or functionality you might wish them to have has to be added on through other objects. A common requirement is to present a human-readable version of the enum field to the user, which is most often accomplished through the use of the DescriptionAttribute. A further problem is that they are often serialized as integers, which means that the textual representation has to be reproduced in whatever database, reporting tool, or other system consumes the serialized value.

Polymorphism is always an alternative to enumerations. Polymorphism brings a problem of its own—lack of discoverability. It would be nice if we could have the power of a polymorphic type coupled with the discoverability of an enumeration.

Fortunately, we can. It’s a variation on the Flyweight pattern. I don’t think it fits the technical definition of the Flyweight pattern because it has a different purpose. Flyweight is generally employed to minimize memory usage. We’re going to use some of the mechanics of the pattern for a different purpose.

Consider a simple domain model with two objects: Task and TaskState. A Task has a Name, Description, and TaskState. A TaskState has a Name, a Value, and a boolean method that indicates whether a task can move from one state to another. The available states are Pending, InProgress, Completed, Deferred, and Canceled.

If we implemented this polymorphically, we’d create an abstract class or interface to represent the TaskState. Then we’d provide the various property and method implementations for each subclass. We would have to search the type system to discover which subclasses are available to represent TaskStates.

Instead, let’s create a sealed TaskState class with a defined list of static TaskState instances and a private constructor. We seal the class because our system controls the available TaskStates. We privatize the constructor because we want clients of this class to be forced to use the pre-defined static instances. Finally, we initialize the static instances in a static constructor on the class. Here’s what the code looks like:

    public sealed class TaskState
    {
        public static TaskState Pending { get; private set; }
        public static TaskState InProgress { get; private set; }
        public static TaskState Completed { get; private set; }
        public static TaskState Deferred { get; private set; }
        public static TaskState Canceled { get; private set; }

        public string Name { get; private set; }
        public string Value { get; private set; }

        private readonly List<TaskState> _transitions = new List<TaskState>();

        private TaskState AddTransition(TaskState value)
        {
            this._transitions.Add(value);
            return this;
        }

        public bool CanTransitionTo(TaskState value)
        {
            return this._transitions.Contains(value);
        }

        private TaskState()
        {
        }

        static TaskState()
        {
            BuildStates();
            ConfigureTransitions();
        }

        private static void ConfigureTransitions()
        {
            Pending.AddTransition(InProgress).AddTransition(Canceled);
            InProgress.AddTransition(Completed).AddTransition(Deferred).AddTransition(Canceled);
            Deferred.AddTransition(InProgress).AddTransition(Canceled);
        }

        private static void BuildStates()
        {
            Pending = new TaskState() { Name = "Pending", Value = "Pending", };
            InProgress = new TaskState() { Name = "In Progress", Value = "InProgress", };
            Completed = new TaskState() { Name = "Completed", Value = "Completed", };
            Deferred = new TaskState() { Name = "Deferred", Value = "Deferred", };
            Canceled = new TaskState() { Name = "Canceled", Value = "Canceled", };
        }
    }

This pattern allows us to consume the class as if it were an enumeration:

    var task = new Task()
    {
        State = TaskState.Pending,
    };

    if (task.State.CanTransitionTo(TaskState.Completed))
    {
        // do something
    }

We still have the flexibility of polymorphic instances. I’ve used this pattern several times with great effect in my software. I hope it benefits you as much as it has me.

One of the points I tried to make in my talk about TDD yesterday is that TDD is more focused on the clarity and expressiveness of your code than on its actual implementation. I wanted to take a little time and expand on what I meant.

I used a Shopping Cart as a TDD sample. In the sample, the requirement is that as products are added to the shopping cart, the cart should contain a list of OrderDetails that are distinct by product sku. Here is the test I wrote for this case (this is commit #8 if you want to follow along):

    [Test]
    public void Details_AfterAddingSameProductTwice_ShouldDefragDetails()
    {
        // Arrange: Declare any variables or set up any conditions
        // required by your test.
        var cart = new Lib.ShoppingCart();
        var product = new Product() { Sku = "ABC", Description = "Test", Price = 1.99 };
        const int firstQuantity = 5;
        const int secondQuantity = 3;

        // Act: Perform the activity under test.
        cart.AddToCart(product, firstQuantity);
        cart.AddToCart(product, secondQuantity);

        // Assert: Verify that the activity under test had the
        // expected results
        Assert.That(cart.Details.Count, Is.EqualTo(1));
        var detail = cart.Details.Single();
        var expectedQuantity = firstQuantity + secondQuantity;
        Assert.That(detail.Quantity, Is.EqualTo(expectedQuantity));
        Assert.That(detail.Product, Is.SameAs(product));
    }

The naive implementation of AddToCart is currently as follows:

    public void AddToCart(Product product, int quantity)
    {
        this._details.Add(new OrderDetail()
        {
            Product = product,
            Quantity = quantity
        });
    }

This implementation of AddToCart fails the test case since it does not account for adding the same product sku twice. In order to get to the “Green” step, I made these changes:

    public void AddToCart(Product product, int quantity)
    {
        if (this.Details.Any(detail => detail.Product.Sku == product.Sku))
        {
            this.Details.First(detail => detail.Product.Sku == product.Sku).Quantity += quantity;
        }
        else
        {
            this._details.Add(new OrderDetail()
            {
                Product = product,
                Quantity = quantity
            });
        }
    }

At this point, the test passes, but I think the above implementation is kind of ugly. Having the code in this ugly state still has value, though, because now I know I have solved the problem correctly. Let’s start by using Extract Condition on the conditional expression, replacing the duplicated Any/First lookups with a single SingleOrDefault call.

    public void AddToCart(Product product, int quantity)
    {
        var detail = this.Details.SingleOrDefault(d => d.Product.Sku == product.Sku);
        if (detail != null)
        {
            detail.Quantity += quantity;
        }
        else
        {
            this._details.Add(new OrderDetail()
            {
                Product = product,
                Quantity = quantity
            });
        }
    }

The algorithm being used is becoming clearer.

- Determine if I have an OrderDetail matching the Product Sku.

- If I do, increment the quantity.

- If I do not, create a new OrderDetail matching the product sku and set its quantity.

It’s a pretty simple algorithm. Let’s do a little more refactoring. Let’s apply Extract Method to the lambda expression.

    public void AddToCart(Product product, int quantity)
    {
        var detail = GetProductDetail(product);
        if (detail != null)
        {
            detail.Quantity += quantity;
        }
        else
        {
            this._details.Add(new OrderDetail()
            {
                Product = product,
                Quantity = quantity
            });
        }
    }

    private OrderDetail GetProductDetail(Product product)
    {
        return this.Details.SingleOrDefault(d => d.Product.Sku == product.Sku);
    }

This reads still more clearly. This is also where I stopped in my talk. Note that it has not been necessary to make changes to my test case because the changes I have made affect only the private implementation of the class. I’d like to go a little further now and say that if I change the algorithm I can actually make this code even clearer. What if the algorithm was changed to:

- Find or Create an OrderDetail matching the product sku.

- Update the quantity.

In the first algorithm, I am taking different action with the quantity depending on whether or not the detail exists. In the new algorithm, I’m demoting the importance of whether the order detail already exists so that I can always take the same action with respect to the quantity. Here’s the naive implementation:

    public void AddToCart(Product product, int quantity)
    {
        OrderDetail detail;
        if (this.Details.Any(d => d.Product.Sku == product.Sku))
        {
            detail = this.Details.Single(d => d.Product.Sku == product.Sku);
        }
        else
        {
            detail = new OrderDetail() { Product = product };
            this._details.Add(detail);
        }
        detail.Quantity += quantity;
    }

The naive implementation is a little clearer. Let’s apply some refactoring effort and see what happens. Let’s apply Extract Method to the entire process of getting the order detail.

    public void AddToCart(Product product, int quantity)
    {
        var detail = GetDetail(product);
        detail.Quantity += quantity;
    }

    private OrderDetail GetDetail(Product product)
    {
        OrderDetail detail;
        if (this.Details.Any(d => d.Product.Sku == product.Sku))
        {
            detail = this.Details.Single(d => d.Product.Sku == product.Sku);
        }
        else
        {
            detail = new OrderDetail() { Product = product };
            this._details.Add(detail);
        }
        return detail;
    }

This is starting to take shape. However, “GetDetail” does not really communicate that we may be creating a new detail instead of just returning an existing one. If we rename it to FindOrCreateDetailForProduct, we may get that clarity.

    public void AddToCart(Product product, int quantity)
    {
        var detail = FindOrCreateDetailForProduct(product);
        detail.Quantity += quantity;
    }

    private OrderDetail FindOrCreateDetailForProduct(Product product)
    {
        OrderDetail detail;
        if (this.Details.Any(d => d.Product.Sku == product.Sku))
        {
            detail = this.Details.Single(d => d.Product.Sku == product.Sku);
        }
        else
        {
            detail = new OrderDetail() { Product = product };
            this._details.Add(detail);
        }
        return detail;
    }

AddToCart() looks pretty good now. It’s easy to read, and each line communicates the intent of our code clearly. FindOrCreateDetailForProduct() on the other hand is less easy to read. I’m going to apply Extract Conditional to the if statement, and Extract Method to each side of the expression. Here is the result:

    private OrderDetail FindOrCreateDetailForProduct(Product product)
    {
        var detail = HasProductDetail(product)
            ? FindDetailForProduct(product)
            : CreateDetailForProduct(product);
        return detail;
    }

    private OrderDetail CreateDetailForProduct(Product product)
    {
        var detail = new OrderDetail() { Product = product };
        this._details.Add(detail);
        return detail;
    }

    private OrderDetail FindDetailForProduct(Product product)
    {
        var detail = this.Details.Single(d => d.Product.Sku == product.Sku);
        return detail;
    }

    private bool HasProductDetail(Product product)
    {
        return this.Details.Any(d => d.Product.Sku == product.Sku);
    }

Now I’ve noticed that HasProductDetail and FindDetailForProduct are only using the product sku. I’m going to change the signature of these methods to accept only the sku, and I’ll change the method names accordingly.

    public void AddToCart(Product product, int quantity)
    {
        var detail = FindOrCreateDetailForProduct(product);
        detail.Quantity += quantity;
    }

    private OrderDetail FindOrCreateDetailForProduct(Product product)
    {
        var detail = HasDetailForProductSku(product.Sku)
            ? FindDetailByProductSku(product.Sku)
            : CreateDetailForProduct(product);
        return detail;
    }

    private OrderDetail CreateDetailForProduct(Product product)
    {
        var detail = new OrderDetail() { Product = product };
        this._details.Add(detail);
        return detail;
    }

    private OrderDetail FindDetailByProductSku(string productSku)
    {
        var detail = this.Details.Single(d => d.Product.Sku == productSku);
        return detail;
    }

    private bool HasDetailForProductSku(string productSku)
    {
        return this.Details.Any(d => d.Product.Sku == productSku);
    }

At this point, the AddToCart() method has gone through some pretty extensive refactoring. The basic algorithm has been changed, and the implementation of the new algorithm has been changed a lot. Now let me point something out: At no time during any of these changes did our test fail, and at no time during these changes did our test fail to express the intended behavior of the class. We made changes to every aspect of the implementation: We changed the order of the steps in the algorithm. We constantly added and renamed methods until we had very discrete well-named functions that stated explicitly what the code is doing. The unit test remained a valid expression of intended behavior despite all of these changes. This is what it means to say that a test is more about API than implementation. The unit-test should not depend on the implementation, nor does it necessarily imply a particular implementation.

Happy Coding!

I saw Matthew Podwysocki speak on Reactive Extensions at the most recent DC Alt .NET meeting. I’ve heard some buzz about Reactive Extensions (Rx) as LINQ over Events. That sounded cool, so I put the sticky note in the back of my mind to look into it later. Matthew’s presentation blew my mind a bit. Rx provides so much functionality and is so different from traditional event programming that I thought it would be helpful for me to retrace a few of the first necessary steps that would go into creating something as powerful as Rx. To that end, I started writing a DisposableEventObserver class.

This class has two goals at this point:

- Replace the traditional EventHandler += new EventHandler() syntax with an IDisposable syntax.

- Add conditions to the Observer that determine if it will handle the events.

This is learning code. What I mean by this is that it is doubtful that the code I’m writing here will ever be used in a production application, since Rx will be far more capable than what I write here. The purpose of this code is to help me (and maybe you) to gain insight into how Rx works. There are two notable Rx features that I will not be handling in v1 of DisposableEventObserver:

- Wrangling Asynchronous Events.

- Composability.

The first test I wrote looked something like this:

    [TestFixture]
    public class DisposableEventObserverTests
    {
        public event EventHandler<EventArgs> LocalEvent;

        [Test]
        public void SingleSubscriber()
        {
            // Arrange: Setup the test context
            var count = 0;

            // Act: Perform the action under test
            using (var observer = new DisposableEventObserver<EventArgs>(this.LocalEvent,
                (sender, e) => { count += 1; }))
            {
                this.LocalEvent.Invoke(null, null);
                this.LocalEvent.Invoke(null, null);
                this.LocalEvent.Invoke(null, null);
            }

            // Assert: Verify the results of the action.
            Assert.That(count, Is.EqualTo(3));
        }
    }

The test fixture served as my eventing object. I’m passing the event handler as a lambda. There were two interesting things about this approach. The first is that the type required to pass this.LocalEvent is the same delegate type as that required to pass the handler. The second is that this code did not work.

I was a little confused as to why the test didn’t pass. The lines inside the using block blew up with a NullReferenceException when I tried to reference this.LocalEvent. This is odd because inside the Observer I was definitely adding the handler to the event delegate. What’s going on here? It turns out that although events look for all intents and purposes like a standard delegate field of the same type, the .NET Framework treats them differently. Events can only be invoked by the class that owns them; outside code can only add or remove handlers. Delegates are also immutable: passing this.LocalEvent as an argument hands the Observer the current delegate value (null in this case), and adding a handler to that value produces a new delegate without touching the event itself. The event fields themselves cannot reliably be passed as parameters.
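The failure can be reproduced in isolation. The sketch below is not the DisposableEventObserver code; Publisher and TryAttach are hypothetical names used only to show that adding a handler to a delegate passed by value leaves the event itself without subscribers.

```csharp
using System;

// Hypothetical class for illustration; not part of the original project.
public class Publisher
{
    public event EventHandler<EventArgs> LocalEvent;

    // Receives the current delegate *value* of the event, not the event itself.
    private static void TryAttach(EventHandler<EventArgs> target, EventHandler<EventArgs> handler)
    {
        // Delegates are immutable: += builds a new delegate and assigns it
        // to the local parameter only. The caller's event is unchanged.
        target += handler;
    }

    public bool HasSubscribersAfterPassingByValue()
    {
        TryAttach(this.LocalEvent, (sender, e) => { });
        return this.LocalEvent != null;
    }

    public bool HasSubscribersAfterDirectSubscribe()
    {
        this.LocalEvent += (sender, e) => { };
        return this.LocalEvent != null;
    }
}
```

Calling HasSubscribersAfterPassingByValue() on a fresh Publisher reports no subscribers, which is why invoking the event afterwards throws a NullReferenceException.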

I backed up a bit and tried this syntax:

    [Test]
    public void SingleSubscriber()
    {
        // Arrange: Setup the test context
        var count = 0;
        EventHandler<EventArgs> evt = delegate { count += 1; };

        // Act: Perform the action under test
        using (var observer = new DisposableEventObserver<EventArgs>(this, "LocalEvent", evt))
        {
            this.LocalEvent.Invoke(null, null);
            this.LocalEvent.Invoke(null, null);
            this.LocalEvent.Invoke(null, null);
        }

        // Assert: Verify the results of the action.
        Assert.That(count, Is.EqualTo(3));
    }

This test implies an implementation that uses reflection to find the event and add the handler. This worked the first time at bat; however, I don’t like that magic string “LocalEvent” sitting there. I thought back to Josh Smith’s PropertyObserver and wondered if I could do something similar. Here’s a test that takes an expression that resolves to the event:
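For reference, a reflection-based subscription of the kind this test implies might look roughly like the following. This is a minimal sketch, not the actual DisposableEventObserver source: NamedEventSubscription and Ticker are hypothetical names, and the lookup assumes a public instance event.

```csharp
using System;
using System.Reflection;

// Hypothetical sketch: subscribes by event name, unsubscribes on Dispose.
public sealed class NamedEventSubscription<TEventArgs> : IDisposable
    where TEventArgs : EventArgs
{
    private readonly object _source;
    private readonly EventInfo _eventInfo;
    private readonly EventHandler<TEventArgs> _handler;

    public NamedEventSubscription(object source, string eventName, EventHandler<TEventArgs> handler)
    {
        _source = source;
        _handler = handler;

        // Type.GetEvent(string) only finds public events.
        _eventInfo = source.GetType().GetEvent(eventName);
        if (_eventInfo == null)
        {
            throw new ArgumentException("No public event named " + eventName, "eventName");
        }

        _eventInfo.AddEventHandler(_source, _handler);
    }

    public void Dispose()
    {
        _eventInfo.RemoveEventHandler(_source, _handler);
    }
}

// Hypothetical event source used to exercise the sketch.
public class Ticker
{
    public event EventHandler<EventArgs> Tick;

    public void Raise()
    {
        var handler = Tick;
        if (handler != null) handler(this, EventArgs.Empty);
    }
}
```

Wrapping a NamedEventSubscription in a using block gives the IDisposable syntax described earlier: the handler receives events raised inside the block and none raised after it.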

    [Test]
    public void Subscribe_Using_Lambda()
    {
        // Arrange: Setup the test context
        var count = 0;
        EventHandler<EventArgs> evt = delegate { count += 1; };

        // Act: Perform the action under test
        using (var observer = new DisposableEventObserver<EventArgs>(this, () => this.LocalEvent, evt))
        {
            this.LocalEvent.Invoke(null, null);
            this.LocalEvent.Invoke(null, null);
            this.LocalEvent.Invoke(null, null);
        }

        // Assert: Verify the results of the action.
        Assert.That(count, Is.EqualTo(3));
    }

This looks much better to me. Now I’ll get a compile-error if the event name or signature changes.
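One way such a lambda could be turned back into an event name is to accept it as an expression tree and read the member it accesses. The sketch below assumes that approach; EventName and Sample are hypothetical names, and the real Observer may resolve the event differently. It relies on the lambda being written inside the class that declares the event, where the event name refers to its backing delegate field.

```csharp
using System;
using System.Linq.Expressions;

// Hypothetical helper: extracts the member name from () => this.SomeEvent.
public static class EventName
{
    public static string From<TDelegate>(Expression<Func<TDelegate>> eventAccessor)
    {
        var member = eventAccessor.Body as MemberExpression;
        if (member == null)
        {
            throw new ArgumentException("Expected a member access such as () => this.LocalEvent");
        }
        return member.Member.Name;
    }
}

// Hypothetical class that declares the event and resolves its own name.
public class Sample
{
    public event EventHandler<EventArgs> LocalEvent;

    public string ResolveName()
    {
        // Inside the declaring class, LocalEvent refers to the backing field,
        // so it can be read inside an expression tree.
        return EventName.From(() => this.LocalEvent);
    }
}
```

The resolved name could then be handed to a reflection lookup such as Type.GetEvent, so the magic string disappears from the calling code while the subscription mechanics stay the same.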

The next step is to add some conditionality to the event handling. While this class will not be Queryable like Rx, I’m going to use a similar Where() syntax to add conditions. I added the following test:

    [Test]
    public void Where_ConditionNotMet_EventShouldNotFire()
    {
        // Arrange: Setup the test context
        var count = 0;
        EventHandler<EventArgs> evt = delegate { count += 1; };

        // Act: Perform the action under test
        using (var observer = new DisposableEventObserver<EventArgs>(this,
            () => this.LocalEvent,
            evt).Where((sender, e) => e != null))
        {
            this.LocalEvent.Invoke(null, null);
            this.LocalEvent.Invoke(null, null);
            this.LocalEvent.Invoke(null, null);
        }

        // Assert: Verify the results of the action.
        Assert.That(count, Is.EqualTo(0));
    }

The Where condition specifies that the event args cannot be null. In this case count should never be incremented. I had to make several changes to the internals of the Observer class to make this work. Instead of registering “evt” with the event handler I had to create an interceptor method inside the Observer to test the criteria. If the criteria are met then “evt” will be called. I implemented Where as an “Add” function over a collection of Func<object, TEventArgs, bool>.
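The interceptor idea can be sketched on its own, separate from the subscription mechanics. FilteredHandler below is a hypothetical stand-in, not the Observer's real internals: it keeps a list of Func<object, TEventArgs, bool> conditions and only forwards to the wrapped handler when all of them pass.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical sketch of the interceptor: Intercept is what would be
// registered with the event instead of the caller's handler.
public sealed class FilteredHandler<TEventArgs> where TEventArgs : EventArgs
{
    private readonly EventHandler<TEventArgs> _handler;
    private readonly List<Func<object, TEventArgs, bool>> _conditions =
        new List<Func<object, TEventArgs, bool>>();

    public FilteredHandler(EventHandler<TEventArgs> handler)
    {
        _handler = handler;
    }

    // Where is simply an "Add" over the condition list; returning this
    // allows conditions to be chained.
    public FilteredHandler<TEventArgs> Where(Func<object, TEventArgs, bool> condition)
    {
        _conditions.Add(condition);
        return this;
    }

    public void Intercept(object sender, TEventArgs e)
    {
        // Forward only when every registered condition is satisfied.
        if (_conditions.All(condition => condition(sender, e)))
        {
            _handler(sender, e);
        }
    }
}
```

With a condition of (sender, e) => e != null, a call to Intercept(null, null) is swallowed, which matches the expectation in the test above that count stays at zero.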

The full implementation can be found here, and the tests can be found here.

When working with the ObservableCollection or INotifyCollectionChanged interface, it is common to see code like the following:

    void OnCollectionChanged(object sender, NotifyCollectionChangedEventArgs e)
    {
        switch (e.Action)
        {
            case NotifyCollectionChangedAction.Add:
                HandleAddedItems(e);
                break;
            case NotifyCollectionChangedAction.Move:
                break;
            case NotifyCollectionChangedAction.Remove:
                HandleRemovedItems(e);
                break;
            case NotifyCollectionChangedAction.Replace:
                HandleReplacedItems(e);
                break;
            case NotifyCollectionChangedAction.Reset:
                HandleClearItems(e);
                break;
        }
    }

There’s nothing particularly wrong with this code except that it’s kind of bulky, and that it starts to crop up all over your application. There’s another problem that’s not immediately obvious. The “Reset” action only gets fired when the ObservableCollection is cleared, but its event args do not contain the items that were removed from the collection. If your logic calls for processing removed items when they’re cleared, the built-in API offers you nothing. You have to keep your own shadow copy of the collection so that you can respond to the Clear() correctly.

For that reason I wrote and added ObservableCollectionHandler to manage these events for you. It accepts three kinds of delegates for responding to changes in the source collection: ItemAdded, ItemRemoved, and ItemReplaced actions. (It would be easy to add ItemMoved as well, but I have seldom had a need for that so I coded the critical path first.) The handler maintains a shadow copy of the list so that the ItemRemoved delegates are called in response to the Clear() command.

    [Test]
    public void OnItemAdded_ShouldPerformAction()
    {
        // Arrange: Setup the test context
        int i = 0;
        var collection = new ObservableCollection<Employee>();
        var handler = new ObservableCollectionHandler<Employee>(collection)
            .OnItemAdded(e => i++);

        // Act: Perform the action under test
        collection.Add(new Employee());

        // Assert: Verify the results of the action.
        Require.That(i).IsEqualTo(1);
    }
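The shadow-copy technique itself is small enough to sketch in isolation. RemovalWatcher below is a hypothetical, stripped-down illustration and not the ObservableCollectionHandler source: it mirrors the source collection in a private list so that a Reset can still report every item that was removed.

```csharp
using System;
using System.Collections.Generic;
using System.Collections.ObjectModel;
using System.Collections.Specialized;

// Hypothetical sketch: reports removed items, including those lost to Clear().
public sealed class RemovalWatcher<T>
{
    private readonly List<T> _shadow;
    private readonly Action<T> _onRemoved;

    public RemovalWatcher(ObservableCollection<T> source, Action<T> onRemoved)
    {
        _shadow = new List<T>(source);
        _onRemoved = onRemoved;
        source.CollectionChanged += OnCollectionChanged;
    }

    private void OnCollectionChanged(object sender, NotifyCollectionChangedEventArgs e)
    {
        if (e.Action == NotifyCollectionChangedAction.Move)
        {
            return; // membership is unchanged by a move
        }

        if (e.Action == NotifyCollectionChangedAction.Reset)
        {
            // Reset carries no OldItems, so fall back to the shadow copy.
            foreach (var item in _shadow) _onRemoved(item);
            _shadow.Clear();
            _shadow.AddRange((IEnumerable<T>)sender); // resync with the source
            return;
        }

        if (e.OldItems != null)
        {
            foreach (T item in e.OldItems)
            {
                _shadow.Remove(item);
                _onRemoved(item);
            }
        }

        if (e.NewItems != null)
        {
            foreach (T item in e.NewItems) _shadow.Add(item);
        }
    }
}
```

The cost of this design is a second list holding the same references as the source collection, which is the trade-off needed to answer "what was removed?" after a Clear().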

Another common need with respect to ObservableCollections is to track which items were added, modified, and removed from the source collection. To facilitate this I wrote the ChangeTracker class. ChangeTracker makes use of ObservableCollectionHandler to set up activities in response to changes in the source collection. ChangeTracker maintains a list of additions and removals from the source collection. It can also maintain a list of modified items, assuming the items in the collection implement INotifyPropertyChanged.

Here is a sample unit test indicating its usage:

    [Test]
    public void GetChanges_AfterAdd_ShouldReturnAddedItems()
    {
        // Arrange: Setup the test context
        var source = new ObservableCollection<Employee>();
        var tracker = new ChangeTracker<Employee>(source);

        // Act: Perform the action under test
        var added = new Employee();
        source.Add(added);

        // Assert: Verify the results of the action.
        var changes = tracker.GetChanges(ChangeType.All);
        Require.That(changes.Any()).IsTrue();
        Require.That(tracker.HasChanges).IsTrue();

        var change = changes.First();
        Require.That(change).IsNotNull();
        Require.That(change.Type).IsEqualTo(ChangeType.Add);
        Require.That(change.Value).IsTheSameAs(added);
    }

The full source code and unit tests can be found here.

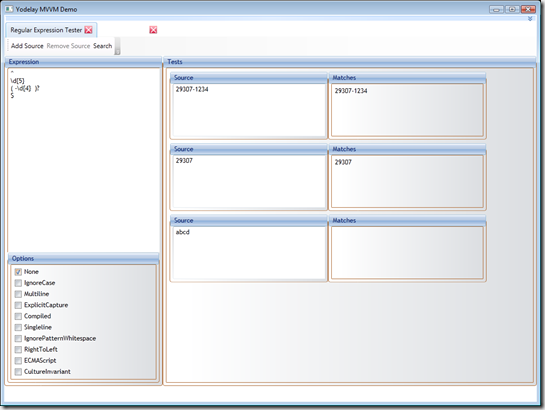

Yodelay has been updated. I’ve modified the Regular Expression tester so that it supports n-number of test contexts with a single expression. In addition, you can search regexlib.com for a useful expression.

This screen is fully implemented using MVVM. The search window serves as an example of using MVVM with dialogs.

I’ve also pulled in the themes from WpfThemes and integrated the ThemeManager.

Check it out!

I’ve been dealing with enums and databases for a while now. I think it’s something every LOB developer faces. Enums are developer-friendly because they provide context to a delimited range of options in an easy-to-understand way. They do come with a couple of drawbacks, however.

The first is that they’re not friendly for users. That Pascal-cased identifier looks great to a developer, but users aren’t really used to reading “RequireNumericData” and making sense out of it. Since this post is supposed to be more about databases than UI, I’ll provide a quick snippet of code I use to get user-friendly descriptions of enum values:

    public static class EnumService
    {
        public static string GetDescription(System.Type enumType, System.Enum fieldValue)
        {
            var enumFields = from element in enumType
                                 .GetFields(BindingFlags.Public | BindingFlags.Static)
                             select element;

            var matchingFields = enumFields
                .Where(e => string.Compare(fieldValue.ToString(), e.Name) == 0);
            var field = matchingFields.FirstOrDefault();

            string result = fieldValue.ToString()
                .SplitCompoundTerm()
                .ToTitleCase();

            if (field != null)
            {
                var descriptionAttribute = field
                    .GetCustomAttributes(typeof(DescriptionAttribute), true)
                    .Cast<DescriptionAttribute>()
                    .FirstOrDefault();

                if (descriptionAttribute != null)
                    result = descriptionAttribute.Description;
            }

            return result;
        }

        public static string GetDescription<T>(T fieldValue)
        {
            var fv = fieldValue as System.Enum;
            var result = GetDescription(typeof (T), fv);
            return result;
        }
    }

I refer to two extension methods on the string class, SplitCompoundTerm() and ToTitleCase(). Here is the code for them:

    public static string SplitCompoundTerm(this string source)
    {
        var chars = source.ToCharArray();
        var upperChars = from element in chars
                         where Char.IsUpper(element)
                         select element;

        var queue = new Queue<char>();
        upperChars.ForEach(queue.Enqueue);

        var newTerm = new List<Char>();
        var lastChar = default(char);

        foreach (var element in chars)
        {
            if (queue.Count() > 0 && queue.Peek() == element)
            {
                if (lastChar != ' ')
                    newTerm.Add(' ');
                newTerm.Add(queue.Dequeue());
            }
            else
            {
                newTerm.Add(element);
            }
            lastChar = element;
        }

        var newCharArray = newTerm.ToArray();
        var temp = new string(newCharArray);
        var result = temp.Trim();
        return result;
    }

    public static string ToTitleCase(this string source)
    {
        var result = CultureInfo.InvariantCulture.TextInfo.ToTitleCase(source);
        return result;
    }

SplitCompoundTerm() bothers me a bit. All it’s doing is looking for upper-cased characters in the term and inserting a space before them. I’m sure there’s a way to do that with regular expressions, but I haven’t gotten around to figuring that out yet.
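For what it’s worth, one possible regular-expression version is sketched below. It is an assumption about what an equivalent would look like rather than code from the original project, and SplitCompoundTermWithRegex and CompoundTermExtensions are hypothetical names. The pattern matches the empty position in front of every uppercase letter that is not already preceded by a space, inserts a space there, and trims the result.

```csharp
using System.Text.RegularExpressions;

public static class CompoundTermExtensions
{
    // Hypothetical regex-based alternative to SplitCompoundTerm().
    public static string SplitCompoundTermWithRegex(this string source)
    {
        // (?<! )     : the previous character is not a space
        // (?=\p{Lu}) : the next character is an uppercase letter
        // Both are zero-width, so the replacement only inserts a space.
        return Regex.Replace(source, @"(?<! )(?=\p{Lu})", " ").Trim();
    }
}
```

The leading space inserted before an initial capital is removed by Trim(), so "RequireNumericData" becomes "Require Numeric Data".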

A second drawback is that you often have to decide how to store enum values in a database. Do you store the numeric value? The text? Do you create a table that contains both the numeric and text values? Would that be one table for each enum, or one master table for all enums and an additional “enum type” column?

Storing the numeric value is nice because it’s easy, but it’s very hard to report on. If your enum values are binary flags, then a single numeric value is going to be great for searching. If you need to report on the data, you really want text. Text creates its own issues because you can’t really do a search on all records with a state of “Pending | Ready” using text. All of the developer’s problems are more easily solved by the numeric value, and all of the reporting problems are more easily solved by descriptive text.

I’m sure some of you won’t like what I’m about to propose, but here it is: I store both values. Every enum value becomes not one, but two fields on the record in the RDBMS. From a data integrity standpoint, I can get away with this because I route all my data access calls through a well-defined data access layer. My apps never manipulate the RDBMS directly. While this approach solves my problems, it does come with the cost of increased data storage. This may or may not be an issue for you. It hasn’t been one for me so far.
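As a rough illustration of the "store both" idea, the sketch below keeps the numeric and text forms together and only lets them change through a single method, standing in for the data access layer. OrderStatus, OrderRow, and the two property names are hypothetical and are not taken from any real schema.

```csharp
using System;

// Hypothetical flags enum for illustration.
[Flags]
public enum OrderStatus
{
    None = 0,
    Pending = 1,
    Ready = 2,
    Shipped = 4
}

// Hypothetical record shape: one enum value, two persisted fields.
public sealed class OrderRow
{
    public int StatusValue { get; private set; }    // numeric column, for searching
    public string StatusText { get; private set; }  // text column, for reporting

    // Both fields are always written together so they cannot drift apart.
    public void SetStatus(OrderStatus status)
    {
        StatusValue = (int)status;
        StatusText = status.ToString();
    }
}
```

Setting a status of OrderStatus.Pending | OrderStatus.Ready stores 3 in the numeric field, which supports bitwise searches, and "Pending, Ready" in the text field, which a report can display directly.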

Happy Coding!