I was reading this Stack Overflow question: How can I solve this: Nhibernate Querying in an n-tier architecture?

The author is trying to abstract away NHibernate and is being counseled rather heavily not to do so. In the comments there are a couple of blog entries by Ayende on this topic:

The false myth of encapsulating data access in the DAL

Architecting in the pit of doom: The evils of the repository abstraction layer

Ayende is pretty down on abstracting away NHibernate. The answers on Stack Overflow push the questioner toward just standing up an in-memory SQLite instance and executing the tests against that.

The SQLite solution is pretty painful with complex databases. It requires that you set up an enormous amount of data that isn’t really germane to your test in order to satisfy foreign keys and other constraints. The ceremony of creating this extra data clutters the test and obscures the intent. To test a query for employees who are managers, I’d have to create Departments, Job Titles, Salary Types, and so on. Dislike.

What problem am I trying to solve?

In the .NET space developers tend to want to use LINQ to access, filter, and project data. NHibernate (partially) supports LINQ via an extension method off of ISession. Because ISession.Query<T> is an extension method, it is not stubbable with free mocking tools such as RhinoMocks, Moq, or my favorite: NSubstitute. This is why people push you to use the SQLite solution: the piece of the underlying interface that you want to use most of the time is not built for stubbing.

I think that a fundamental problem with NHibernate is that it is trying to serve two masters. On the one hand it wants to be a faithful port of Hibernate. On the other, it wants to be a good citizen for .NET. Since .NET has LINQ and Java doesn’t, the support for LINQ is shoddy and doesn’t really fit in well with the rest of the API design. LINQ support is an “add-on” to the Java API, and not a first-class citizen. I think this is why it was implemented as an extension method instead of as part of the ISession interface.

I firmly disagree with Ayende on Generic Repository. However, I do agree with some of the criticisms he offers against specific implementations. I think his arguments are a bit of a straw man, however. It is possible to do Generic Repository well.

I prefer to keep my IRepository interface simple:

    public interface IRepository : IDisposable
    {
        IQueryable<T> Find<T>() where T : class;

        T Get<T>(object key) where T : class;

        void Save<T>(T value) where T : class;

        void Delete<T>(T value) where T : class;

        ITransaction BeginTransaction();

        IDbConnection GetUnderlyingConnection();
    }

Here are some of my guidelines when using a Generic Repository abstraction:

- My purpose in using Generic Repository is not to “hide” the ORM, but

  - to ease testability.

  - to provide a common interface for accessing multiple types of databases (e.g., I have implemented IRepository against relational and non-relational databases).

- Most of my storage operations follow the Retrieve-Modify-Persist pattern, so Find<T>, Get<T>, and Save<T> support almost everything I need.

- I don’t expose my data models outside of self-contained operations, so Attach/Detach are not useful to me.

- If I need any of the other advanced ORM features, I’ll use the ORM directly and write an appropriate integration test for that functionality.

- I don’t use Attach/Detach, bulk operations, Flush, Futures, or any other advanced features of the ORM in my IRepository interface. I prefer an interface that is clean, simple, and useful in 95% of my scenarios.

- I implemented Find<T> as an IQueryable<T>. This makes it easy to use the Specification pattern to perform arbitrary queries. I wrote a specification package that targets LINQ for this purpose.

- In production code it is usually easy enough to append where-clauses to the exposed IQueryable<T>

- For dynamic user-driven queries I will write a class that will convert a flat request contract into the where-clause needed by the operation.

- I expose the underlying connection so that if someone needs to execute a stored procedure or raw SQL there is a convenient way of doing that.
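To make the testability point concrete, here is a minimal sketch of an in-memory stand-in for the repository. Everything in it is an assumption made for illustration: `ISimpleRepository` is a trimmed-down, hypothetical version of the interface above (Get<T>, the transaction, and the connection members are left out so the sketch has no ORM dependency), and `InMemoryRepository` and `Employee` are made-up names.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical, trimmed-down variant of IRepository (assumption: the ORM-specific
// members are omitted so that this sketch compiles without NHibernate or EF).
public interface ISimpleRepository
{
    IQueryable<T> Find<T>() where T : class;
    void Save<T>(T value) where T : class;
    void Delete<T>(T value) where T : class;
}

// Keeps one list per entity type and exposes it through LINQ to Objects.
public class InMemoryRepository : ISimpleRepository
{
    private readonly Dictionary<Type, List<object>> _store =
        new Dictionary<Type, List<object>>();

    private List<object> ListFor<T>()
    {
        List<object> list;
        if (!_store.TryGetValue(typeof(T), out list))
        {
            list = new List<object>();
            _store[typeof(T)] = list;
        }
        return list;
    }

    public IQueryable<T> Find<T>() where T : class
    {
        return ListFor<T>().Cast<T>().AsQueryable();
    }

    public void Save<T>(T value) where T : class
    {
        var list = ListFor<T>();
        if (!list.Contains(value)) list.Add(value);
    }

    public void Delete<T>(T value) where T : class
    {
        ListFor<T>().Remove(value);
    }
}

// Hypothetical entity used only for the example.
public class Employee
{
    public string Name { get; set; }
    public bool IsManager { get; set; }
}
```

With something like this, the “employees who are managers” test only needs the employees themselves: save two `Employee` objects, then assert on `repository.Find<Employee>().Where(e => e.IsManager)`. No Departments or Job Titles are required because nothing enforces foreign keys. The trade-off is that LINQ to Objects accepts queries a real provider might reject, so a sketch like this does not replace integration tests.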

Anemic Data Model is my term for a domain model that describes entities and their relationships to other entities, but has little or no business behavior. It basically serves as a contract for a data store. Its purpose is structural, and often to provide ease of queryability.

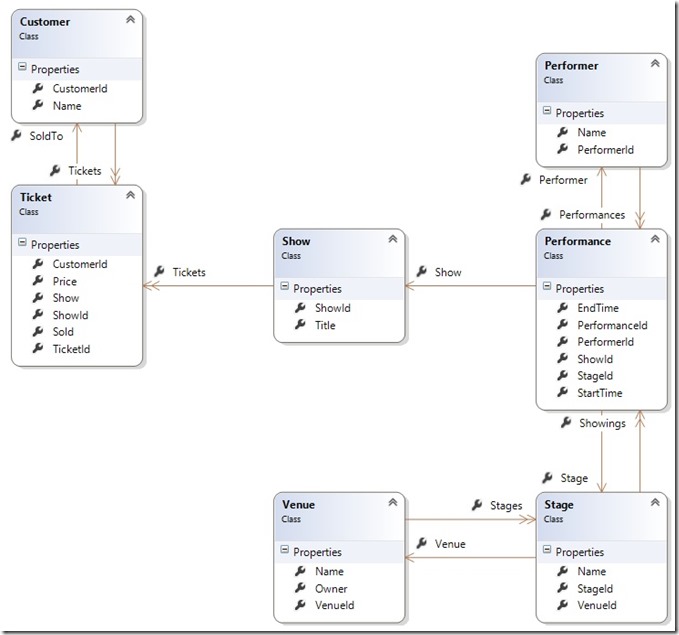

In ShowPlanner, the domain is about planning and selling tickets to musical events called “shows.” Venues contain one or more Stages, and a Show can consist of one or more Performances on one or more Stages at a Venue. Tickets are sold to a Show.

This data model represents the universe of entities, attributes, and relationships that the ShowPlanner application will interact with at this time. These models have no methods or functions, no behavior of any kind.

The Problem of Behavior

Why no behavior? Putting behavior in domain models raises some difficult design questions. How do the domain objects get their dependencies? Which domain object should the method or methods that control behavior go in?

Let’s take a simple case. Suppose you want to model what happens when a Ticket is purchased by a Customer. When a show is created, a number of tickets are created as well. When a Customer buys a Ticket, the ticket will be marked as sold.

Ownership

Generally speaking it’s very seldom that we write basic CRUD applications in an enterprise environment. Application behavior tends to live in the relationships and what happens when certain kinds of relationships are created and destroyed. This means that application operations often involve the state of multiple domain objects directly and indirectly involved in the relationship that is changing.

There are several possible ways to model this operation.

    public class Customer
    {
        public int? CustomerId { get; set; }

        public IList<Ticket> Tickets { get; set; }

        [Required]
        public string Name { get; set; }

        public void Purchase(Ticket ticket)
        {
            ticket.SoldTo = this;
            ticket.Sold = true;
        }
    }

Or

    public class Ticket
    {
        public int? TicketId { get; set; }

        public int? ShowId { get; set; }

        [Required]
        public Show Show { get; set; }

        public int? CustomerId { get; set; }

        public Customer SoldTo { get; set; }

        public bool? Sold { get; set; }

        [Required]
        public decimal Price { get; set; }

        public void SellTo(Customer customer)
        {
            this.Sold = true;
            this.SoldTo = customer;
        }
    }

Or

    public class Show
    {
        public int? ShowId { get; set; }

        public IList<Ticket> Tickets { get; set; }

        [Required]
        public string Title { get; set; }

        public void SellTicket(Customer customer, Ticket ticket)
        {
            ticket.Sold = true;
            ticket.SoldTo = customer;
        }
    }

Which domain object should own the method for selling the ticket? It will depend on who you ask. When a given operation affects multiple entities in the Domain, there is no objective way to decide which entity gets the behavior. The method performing the operation has no natural home.

Dependencies

Let’s assume you secure agreement that Customer should own this operation. You’re happily coding along on something else when a few weeks later your customer tells you that when the ticket is sold a message needs to be sent to a billing system. The messaging system is represented by an interface called IMessageSender. What happens in the domain model now? How does the Customer class get a reference to the MessageSender implementation?

You could do this, I guess:

    public void Purchase(Ticket ticket, IMessageSender messageSender)
    {
        ticket.SoldTo = this;
        ticket.Sold = true;
        messageSender.Enqueue(new BillForTicketMessage(this, ticket));
    }

But that’s an ugly road to walk down. You’ll start expanding dependencies when you need to add logging, authorization, validation, and a host of other concerns. Suddenly what started out as a very simple method on a domain model is full of complexity.

Constructor injection is not viable if I’m expecting to use an ORM to retrieve my Customer instance. Even assuming you could make that work, you have to consider that with the addition of each new operation involving Customer, you’ll be adding still more dependencies, which will turn Customer into more of a God class than will be supportable.

Wouldn’t design-by-composition work better?
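As a rough sketch of what composition could look like here, the operation can live in its own class that receives its dependencies through the constructor, leaving Customer and Ticket as plain data. The names `PurchaseTicketCommand` and `RecordingMessageSender` are hypothetical, the `Enqueue(object)` signature on IMessageSender is an assumption, and the models are cut down to the fields the example touches.

```csharp
using System.Collections.Generic;

// Cut-down models: data only, no behavior, no dependencies.
public class Customer
{
    public string Name { get; set; }
}

public class Ticket
{
    public Customer SoldTo { get; set; }
    public bool? Sold { get; set; }
}

public class BillForTicketMessage
{
    public BillForTicketMessage(Customer customer, Ticket ticket)
    {
        Customer = customer;
        Ticket = ticket;
    }

    public Customer Customer { get; private set; }
    public Ticket Ticket { get; private set; }
}

// Assumed shape of the messaging abstraction mentioned in the text.
public interface IMessageSender
{
    void Enqueue(object message);
}

// Hypothetical operation class: owns the behavior and its dependencies.
public class PurchaseTicketCommand
{
    private readonly IMessageSender _messageSender;

    public PurchaseTicketCommand(IMessageSender messageSender)
    {
        _messageSender = messageSender;
    }

    public void Execute(Customer customer, Ticket ticket)
    {
        ticket.SoldTo = customer;
        ticket.Sold = true;
        _messageSender.Enqueue(new BillForTicketMessage(customer, ticket));
    }
}

// Hand-rolled test double that records what was sent.
public class RecordingMessageSender : IMessageSender
{
    public readonly List<object> Sent = new List<object>();

    public void Enqueue(object message)
    {
        Sent.Add(message);
    }
}
```

Logging, authorization, or validation would then become further constructor parameters (or decorators) of the command, and the question of which entity “owns” the method never comes up.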

Designing the Domain Models

I said earlier that I see “domain models as merely a means of persisting the state of the application.” To my way of thinking, this is their core purpose. To that end, they should effectively act as a contract to the underlying data store used by your application. They should support both queryability and data modification and be well-organized.

Queryability

To support queryability, data models should be fully connected. This just means that any relationships between entities should be referentially expressed. Prefer Performance.Show over Performance.ShowId. Some ORMs, such as Entity Framework, support using both the Id and the reference. For others, such as NHibernate, having both properties is an issue. When faced with a choice, prefer a reference over an Id.
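A small sketch of why the reference helps: with Performance.Show available, a query can filter on properties of the related entity directly. The models below are cut down to a few fields and `ForShowTitled` is a hypothetical helper; whether a particular ORM translates the navigation into a join is something to verify against that ORM.

```csharp
using System.Collections.Generic;
using System.Linq;

// Cut-down models for illustration only.
public class Show
{
    public int? ShowId { get; set; }
    public string Title { get; set; }
}

public class Performance
{
    public int? PerformanceId { get; set; }
    public Show Show { get; set; }   // the reference, rather than a bare ShowId
}

public static class PerformanceQueries
{
    // Hypothetical helper: filters performances by a property of the related Show.
    public static IQueryable<Performance> ForShowTitled(
        this IQueryable<Performance> performances, string title)
    {
        return performances.Where(p => p.Show.Title == title);
    }
}
```

With only Performance.ShowId on the model, the same query would need an explicit join against the shows, or a separate lookup of the Id first.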

Data Modification

To support data modification, your data models should contain all the fields used in the database. I was writing an integration test recently and I needed to create some test data in SQL Server. As I tried to insert one of my entities, I discovered that a field required by the database was not actually on the model. The original developer had only populated the fields he needed for the specific operation he was writing, which resulted in additional work and testing for me. It also exposed a lack of integration test coverage for any operation involving this entity, as it was impossible to create new records using existing code.

Organization

Data models are the center of the onion. They should be in their own assembly or package both to make them easier to find and modify, and to prevent other developers from violating the Dependency Depth principle by referencing higher layers.

Do’s

- Put your data models in their own project or assembly. This makes them easy to find. They are the innermost circle in your application, so this project should have next to no dependencies on anything else.

- If your tool set supports it, maintain a class diagram of the models and their relationships. The diagram above was generated by Visual Studio.

- Prefer referential relationships over using Ids to identify related data. Use both if your ORM supports it.

- This should go without saying, but use an ORM.

- In the anal-retentive list of things to consider:

  - List the primary key first.

  - List single-entity references second.

  - List collection references third.

  - List the entity’s fields last.

  - List audit data (create date, create user, etc.) dead last.

  - Alphabetize fields. Why? Because with larger data models it gets really hard to find the field you’re looking for in an unsorted list. This is an easy thing to habitualize and saves a good bit of headache down the road.

- In .NET, use System.ComponentModel.DataAnnotations to annotate your models with metadata when it is known.

  - Especially use StringLength because it produces a nicer error message than “String or binary data would be truncated” when using SQL Server as the database.
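To illustrate the StringLength point from the list above, here is a small sketch that validates an annotated model in memory with the `Validator` class from System.ComponentModel.DataAnnotations. The `Venue` model, its 50-character limit, and the `ModelValidator` helper are assumptions made up for the example.

```csharp
using System.Collections.Generic;
using System.ComponentModel.DataAnnotations;

// Hypothetical model; the 50-character limit is an assumed column size.
public class Venue
{
    public int? VenueId { get; set; }

    [Required]
    [StringLength(50)]
    public string Name { get; set; }
}

public static class ModelValidator
{
    // Runs every validation attribute on the model and returns the failures.
    public static IList<ValidationResult> Validate(object model)
    {
        var results = new List<ValidationResult>();
        var context = new ValidationContext(model, null, null);
        Validator.TryValidateObject(model, context, results, true);
        return results;
    }
}
```

Validating a `Venue` whose Name is 51 characters long yields a single result whose message names the offending property, which is considerably easier to act on than a truncation error raised by the database.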

Don’ts

- Don’t put behavior on the models.

- Don’t accept dependencies in the models.

- Don’t put ORM-specific attributes on the models unless you have no other choice.

- Don’t put anything on the models that isn’t actually in your data store.

  - This is another way of saying “don’t put behavior on the models.” But I’ve seen developers put properties on models that they calculate and set in the course of one operation and that aren’t used in other operations. This breaks down when another operation wants to use the calculated value.

- Don’t use data models in your UI. In web applications, this means don’t use data models in your pages and controllers. Use View Models and some sort of Request-Response api instead.

This is Part 2 of a series

- Part 1: Introduction

- Part 2: Anemic Data Models

Disclaimer: This series of articles is intentionally opinionated. From an architectural perspective I am much more interested in clearly defining each layer than I am in the choice of specific implementation pattern. However, an architecture doesn’t exist without the details that make it up, so I must choose some implementation pattern so that the architecture can be revealed. Since I’m the one writing the series, I’ll choose implementation patterns I like. If you have a better idea, take a fork of ShowPlanner and share it!

At Redacted Associates, we’ve been having a discussion about whether we should use the Generic Repository pattern on top of NHibernate. We have a simple IRepository interface as follows:

For my part, I don’t like to spend a lot of time worrying about the way my ORM interacts with the database. I prefer to spend my design energy on how to architect the application such that interacting with the database is a minor implementation detail, almost an afterthought even.

At least one of my co-workers disagrees, and has given a really good argument for a specific case when using direct features in NHibernate saved some work. This discussion has spurred me to ask myself “what are the most important features of an ORM?” and “at what level of abstraction should we work with an ORM?” There’s no way to answer these questions without identifying your purpose in using the ORM to begin with. With that in mind, I decided to categorize the features we all look for in an ORM and compare them to our Generic Repository implementation.

ORM features basically fall into one of 3 categories:

- Queryability

  - Linq Provider

    In .NET, Linq remains the most discoverable way to query an ORM. NHibernate has the QueryOver API, but I find it to be hopelessly unreadable for anything but the simplest query.

  - Specification pattern

    The easiest specification pattern in .NET relies on Linq. It’s a very nice way to allow API clients to construct their own queries without concerning themselves with database schema details. In an SOA architecture, it provides a flat contract to support complex query results. It minimizes the number of service methods because you don’t have to write GetCustomersByNameAndCityAndProduct.

  - Fully mapped relationships between objects.

    I depend on having a fully expressed query model to use for Linq queries. Getting the right data to work with often involves a number of database relationships, and it’s impossible to predict when some new object or property will be needed to satisfy a query. It’s easiest to ensure that the model fully and accurately expresses the database schema, with all fields and relationships present.

  - Id navigation properties.

    Id navigation properties as a companion to the object relationship properties are really handy. They can reduce query-syntax clutter quite a bit. Employee.ManagerId is cleaner than Employee.Manager.Id. Some ORMs will pull back the whole Manager to get the Id. I hate that.

  - Full support for all relationship types (one-to-one, one-to-many, many-to-many).

    These relationships are standard in relational databases. Any Object-Relational Mapper should support them.

  - Lazy Loading

- Behavior

  - Cascade mappings.

    This is not personally a value to me, but I recognize that in some cases it’s useful.

  - Trigger-behavior.

    This sort of behavior is useful when you want the ORM to handle things like audit fields, soft deletes, or log table entries.

  - Sql-Efficiencies.

    Sometimes pulling back large datasets and mapping them to in-memory objects can be very expensive. If performance is a concern, it’s nice to be able to have the ORM optimize the operation. NHibernate’s “Merge” operation is a good example of this.

- Testability

  - In-memory testability

  - Mockable/Stubbable
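The Specification pattern item in the list above can be sketched in a few lines on top of Linq expression trees. This is an illustrative sketch only, not the package mentioned earlier: `Specification<T>`, `And`, `Satisfying`, and the `Customer` model are all hypothetical names. The one non-obvious step is that two lambdas use different parameter objects, so the second body has to be rewritten onto the first parameter before the two can be combined.

```csharp
using System;
using System.Linq;
using System.Linq.Expressions;

// Hypothetical specification wrapper around a Linq predicate expression.
public class Specification<T>
{
    public Expression<Func<T, bool>> Predicate { get; private set; }

    public Specification(Expression<Func<T, bool>> predicate)
    {
        Predicate = predicate;
    }

    // Combines two specifications into one expression a Linq provider can inspect.
    public Specification<T> And(Specification<T> other)
    {
        var parameter = Predicate.Parameters[0];
        var otherBody = new ParameterReplacer(other.Predicate.Parameters[0], parameter)
            .Visit(other.Predicate.Body);
        var body = Expression.AndAlso(Predicate.Body, otherBody);
        return new Specification<T>(Expression.Lambda<Func<T, bool>>(body, parameter));
    }

    // Rewrites references to one lambda parameter so they point at another.
    private sealed class ParameterReplacer : ExpressionVisitor
    {
        private readonly ParameterExpression _from;
        private readonly ParameterExpression _to;

        public ParameterReplacer(ParameterExpression from, ParameterExpression to)
        {
            _from = from;
            _to = to;
        }

        protected override Expression VisitParameter(ParameterExpression node)
        {
            return node == _from ? _to : base.VisitParameter(node);
        }
    }
}

public static class SpecificationExtensions
{
    public static IQueryable<T> Satisfying<T>(this IQueryable<T> source, Specification<T> spec)
    {
        return source.Where(spec.Predicate);
    }
}

// Hypothetical model for the example.
public class Customer
{
    public string Name { get; set; }
    public string City { get; set; }
}
```

A caller can then build `new Specification<Customer>(c => c.Name == "Ann").And(new Specification<Customer>(c => c.City == "Austin"))` and pass it to `Satisfying`, instead of the service growing a GetCustomersByNameAndCity method for every combination.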

I composed the following table listing the features we are seeking from an ORM and how each tool fares against our needs.

| Feature | NHibernate | Entity Framework | Generic Repository | Micro ORMs |
| --- | --- | --- | --- | --- |
| Linq Provider | Not Fully Implemented | Fully Implemented | Depends on ORM | No |
| Specification Pattern | Easy to implement against partially implemented Linq provider. Hard otherwise. | Easy to implement. | Depends on ORM | No |
| Can Fully Map Relationships | Yes | Yes | Depends on ORM | No |
| Id Navigation Properties | Not without extreme (and not very useful) hacks | Yes | Depends on ORM | Yes |
| Full support for relationship types | One-to-one doesn’t work well. Results in N+1 queries on one side of the relationship | Haven’t tested this. | Depends on ORM | Sort of |
| Lazy Loading | Yes | Yes | Depends on ORM | No |
| Cascade Mappings | Yes | Yes | Depends on ORM | No |
| Trigger Behavior | Yes | Yes | Depends on ORM | No |
| Sql Efficiencies | Yes | Yes | Depends on ORM | No |
| In-memory testability | Yes, provided you use SQLite. | Yes, provided you use SqlCompact edition, or you can interface your DbContext. | Yes | No |
| Mockable-Stubbable | Mostly. Some of the methods you use on ISession are in fact extension methods. .Query is an extension method, which is problematic since that’s an obvious one I’d like to stub. | Mostly. Methods such as .Include() are extension methods with no in-memory counterpart. If I really need to use .Include() I’ll write an integration test instead. | Yes | No |
| Notes | | | | Fantastic for quick and dirty CRUD on single tables. Not so great otherwise. |

Takeaways

My advice is to use Generic Repository except when you need to get close to the metal of your ORM for some fine-grained control over your data access.

The Problem

I’ve been struggling for a while to figure out how to get Entity Framework to set and unset application roles in SQL Server when opening and before closing a connection. The problem is that ConnectionState does not provide a Closing state that fires before a connection is closed.

It was suggested to me to turn off connection pooling. Errrr, no. We want connection pooling for our applications. I also don’t want to have to manually open and close the connection every time I create a DbContext. That’s just messy and irritating.

The next obvious thing to do would be to create a DbConnectionDecorator to wrap the existing database connection and expose the Closing event. This proved to be very difficult because Entity Framework does not expose when and how it opens connections.

Grrrrrr.

The Solution

What’s that you say? I can implement my own EntityFramework Provider? There’s a provider wrapper toolkit I can plug into to do this? Awesome!

Oh wait—that looks really, really, REALLY complicated? You mean I can’t just decorate a single object? I have to decorate a whole family of objects?

Hmmmm.

Alright, tell you what I’ll do. I’ll implement the provider wrapper using the toolkit as best I can, but then I’m going to strip away everything I don’t actually need. Besides, if I just make the various data-related events observable, it’s nothing to trace the output. And caching can easily be added as an IDbContext decorator anyway. I don’t really get why that should be done at the provider level.

Configuring Your Application to use the Provider

To use the new provider, first install the package. At application startup, you’ll need to register the provider and tell it which other provider you are wrapping. The registration process will set the ObservableConnectionFactory as the default connection factory used by EF unless you pass the optional setAsDefault:false.

Consuming the Provider Events

ObservableDbConnection exposes several new events, including Opening, Opened, Failed, Closing, and Closed. However, subscribing to those events directly requires that you cast the DbConnection exposed by EF to ObservableDbConnection, which strikes me as a little cumbersome (not to mention a violation of the LSP). My first use case demands that I handle the Opening and Closing events the same way for every DbConnection application-wide. To that end, ObservableDbConnection (and ObservableDbCommand) pushes its event messages onto a static class called Hub.
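The general shape of such a static hub can be sketched as follows. To be clear, this is not the package’s actual Hub API, which is not shown here; `ConnectionEventHub`, `ConnectionEventArgs`, and the `Publish...` methods are hypothetical names used only to illustrate the idea of connections publishing to one application-wide subscription point.

```csharp
using System;

// Hypothetical event payload; the real provider's payload is not shown in this post.
public class ConnectionEventArgs : EventArgs
{
    public ConnectionEventArgs(string connectionName)
    {
        ConnectionName = connectionName;
    }

    public string ConnectionName { get; private set; }
}

// Hypothetical static hub: connections publish here, application code subscribes once.
public static class ConnectionEventHub
{
    public static event EventHandler<ConnectionEventArgs> Opened;
    public static event EventHandler<ConnectionEventArgs> Closing;

    public static void PublishOpened(object sender, string connectionName)
    {
        Raise(Opened, sender, connectionName);
    }

    public static void PublishClosing(object sender, string connectionName)
    {
        Raise(Closing, sender, connectionName);
    }

    private static void Raise(
        EventHandler<ConnectionEventArgs> handler, object sender, string connectionName)
    {
        if (handler != null) handler(sender, new ConnectionEventArgs(connectionName));
    }
}
```

Application startup code would subscribe once, for example to set the application role in an Opened handler and unset it in a Closing handler, and no caller ever needs to cast a DbConnection. One thing to keep in mind with static events is that subscribers stay reachable until they unsubscribe.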

Guarantees

This code is brand-spanking new and it hasn’t had time to bake yet. I’m using it, but it’s entirely possible that there are unforeseen problems. Feel free to report issues to and/or contribute to the open-source project on BitBucket. Until then, know that it has been rigorously tested and that it works on my machine.

I’ve added an ILookup property, EntriesByState, to the InterceptionContext to make it easier to see which rows were affected during the After() interception phase.

    public class InterceptionContext
    {
        public DbContextBase DbContext { get; internal set; }
        public ObjectContext ObjectContext { get; internal set; }
        public ObjectStateManager ObjectStateManager { get; internal set; }
        public DbChangeTracker ChangeTracker { get; internal set; }
        public IEnumerable<DbEntityEntry> Entries { get; internal set; }
        public ILookup<EntityState, DbEntityEntry> EntriesByState { get; internal set; }
        // ... snipped for brevity
    }

EntriesByState is populated prior to the call to Before(). Added and Modified entities will have their EntityState reverted to Unchanged after SaveChanges() is called. EntriesByState preserves the original state of the entities so that After() interceptors can make use of new Ids and such.
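The reason a lookup can preserve the original state is that `ToLookup` executes immediately, so the grouping is fixed at the moment it is built. The sketch below demonstrates this with stand-in types rather than EF’s own: `TrackedEntry`, `TrackedState`, and `ChangeSnapshot` are hypothetical, and whether the library builds EntriesByState exactly this way is an assumption.

```csharp
using System.Collections.Generic;
using System.Linq;

// Stand-ins for EF's EntityState and DbEntityEntry (hypothetical, for illustration).
public enum TrackedState { Unchanged, Added, Modified, Deleted }

public class TrackedEntry
{
    public string Name { get; set; }
    public TrackedState State { get; set; }
}

public static class ChangeSnapshot
{
    // ToLookup runs eagerly, so the grouping reflects the states at call time.
    public static ILookup<TrackedState, TrackedEntry> Capture(IEnumerable<TrackedEntry> entries)
    {
        return entries.ToLookup(entry => entry.State);
    }
}
```

If the snapshot is captured before saving and every entry is then reset to Unchanged (as a save would do), indexing the lookup by `TrackedState.Added` still returns the entries that were inserted.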

I just wanted to give some link-love to the blogs that were helpful to me in implementing the Specification Pattern. Stay tuned for Isg.Specifications on NuGet!

http://davedewinter.com/2009/05/31/linq-expression-trees-and-the-specification-pattern/

http://blogs.msdn.com/b/meek/archive/2008/05/02/linq-to-entities-combining-predicates.aspx

http://iainjmitchell.com/blog/?p=550

http://huyrua.wordpress.com/2010/07/13/entity-framework-4-poco-repository-and-specification-pattern/

http://devlicio.us/blogs/jeff_perrin/archive/2006/12/13/the-specification-pattern.aspx

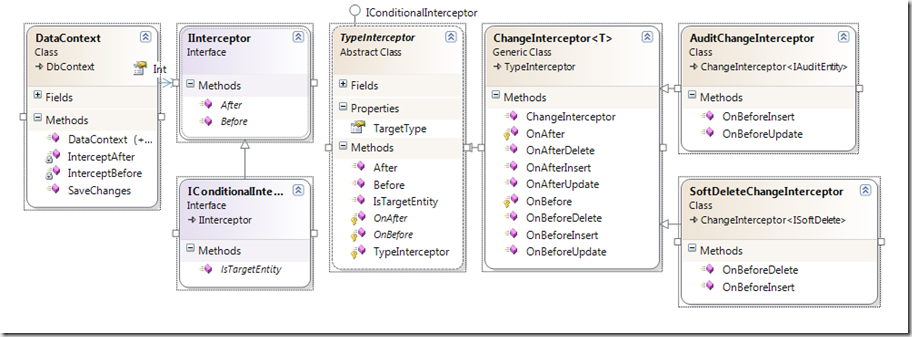

Interceptors are a great way to handle some repetitive and predictable data management tasks. NHibernate has good support for Interceptors both at the change and query levels. I wondered how hard it would be to write interceptors for the new EF4 CTP and was surprised at how easy it actually was… well, for the change interceptors anyway. It looks like query interceptors would require a complete reimplementation of the Linq provider—not something I feel like undertaking right now.

On to the Code!

This is the first interface we’ll use to create a class that can respond to changes in the EF4 data context.

    namespace Yodelay.Data.Entity
    {
        /// <summary>
        /// Interface to support taking some action in response
        /// to some activity taking place on an ObjectStateEntry item.
        /// </summary>
        public interface IInterceptor
        {
            void Before(ObjectStateEntry item);
            void After(ObjectStateEntry item);
        }
    }

We’ll also use this interface to add some conditional execution support.

    namespace Yodelay.Data.Entity
    {
        /// <summary>
        /// Adds conditional execution to an IInterceptor.
        /// </summary>
        public interface IConditionalInterceptor : IInterceptor
        {
            bool IsTargetEntity(ObjectStateEntry item);
        }
    }

The first interceptor I want to write is one that manages four audit columns automatically. First I need an interface that provides the audit columns:

    public interface IAuditEntity
    {
        DateTime InsertDateTime { get; set; }
        DateTime UpdateDateTime { get; set; }
        string InsertUser { get; set; }
        string UpdateUser { get; set; }
    }

The EF4 DbContext class provides an override for SaveChanges() that I can use to start handling the events. I decided to subclass DbContext and add the interception capability to the new class. I snipped the constructors for brevity, but all of the constructors from the base class are bubbled.

    public class DataContext : DbContext
    {
        // Constructors snipped for brevity.

        private readonly List<IInterceptor> _interceptors = new List<IInterceptor>();

        public List<IInterceptor> Interceptors
        {
            get { return this._interceptors; }
        }

        private void InterceptBefore(ObjectStateEntry item)
        {
            this.Interceptors.ForEach(intercept => intercept.Before(item));
        }

        private void InterceptAfter(ObjectStateEntry item)
        {
            this.Interceptors.ForEach(intercept => intercept.After(item));
        }

        public override int SaveChanges()
        {
            const EntityState entitiesToTrack = EntityState.Added |
                                                EntityState.Modified |
                                                EntityState.Deleted;

            // Capture the changed entries up front; after the save they would
            // no longer be returned by a query for these states.
            var elementsToSave =
                this.ObjectContext
                    .ObjectStateManager
                    .GetObjectStateEntries(entitiesToTrack)
                    .ToList();

            elementsToSave.ForEach(InterceptBefore);
            var result = base.SaveChanges();
            elementsToSave.ForEach(InterceptAfter);
            return result;
        }
    }

I only want the AuditChangeInterceptor to fire if the object implements the IAuditEntity interface. I could have directly implemented IConditionalInterceptor, but I decided to extract the object-type criteria into a super-class.

    public abstract class TypeInterceptor : IConditionalInterceptor
    {
        private readonly System.Type _targetType;

        public Type TargetType { get { return _targetType; } }

        protected TypeInterceptor(System.Type targetType)
        {
            this._targetType = targetType;
        }

        public virtual bool IsTargetEntity(ObjectStateEntry item)
        {
            return item.State != EntityState.Detached &&
                   this.TargetType.IsInstanceOfType(item.Entity);
        }

        public void Before(ObjectStateEntry item)
        {
            if (this.IsTargetEntity(item))
                this.OnBefore(item);
        }

        protected abstract void OnBefore(ObjectStateEntry item);

        public void After(ObjectStateEntry item)
        {
            if (this.IsTargetEntity(item))
                this.OnAfter(item);
        }

        protected abstract void OnAfter(ObjectStateEntry item);
    }

I also decided that the super-class should provide obvious method-overrides for BeforeInsert, AfterInsert, BeforeUpdate, etc. For that I created a generic class that sub-classes TypeInterceptor and provides friendlier methods to work with.

    public class ChangeInterceptor<T> : TypeInterceptor
    {
        #region Overrides of Interceptor

        protected override void OnBefore(ObjectStateEntry item)
        {
            T tItem = (T) item.Entity;
            switch (item.State)
            {
                case EntityState.Added:
                    this.OnBeforeInsert(item.ObjectStateManager, tItem);
                    break;
                case EntityState.Deleted:
                    this.OnBeforeDelete(item.ObjectStateManager, tItem);
                    break;
                case EntityState.Modified:
                    this.OnBeforeUpdate(item.ObjectStateManager, tItem);
                    break;
            }
        }

        protected override void OnAfter(ObjectStateEntry item)
        {
            // Caveat: once SaveChanges() has accepted the changes, Added and Modified
            // entries report Unchanged and Deleted entries are detached, so item.State
            // no longer reflects the original operation at this point.
            T tItem = (T) item.Entity;
            switch (item.State)
            {
                case EntityState.Added:
                    this.OnAfterInsert(item.ObjectStateManager, tItem);
                    break;
                case EntityState.Deleted:
                    this.OnAfterDelete(item.ObjectStateManager, tItem);
                    break;
                case EntityState.Modified:
                    this.OnAfterUpdate(item.ObjectStateManager, tItem);
                    break;
            }
        }

        #endregion

        public virtual void OnBeforeInsert(ObjectStateManager manager, T item) { }

        public virtual void OnAfterInsert(ObjectStateManager manager, T item) { }

        public virtual void OnBeforeUpdate(ObjectStateManager manager, T item) { }

        public virtual void OnAfterUpdate(ObjectStateManager manager, T item) { }

        public virtual void OnBeforeDelete(ObjectStateManager manager, T item) { }

        public virtual void OnAfterDelete(ObjectStateManager manager, T item) { }

        public ChangeInterceptor() : base(typeof(T))
        {
        }
    }

Finally, I subclassed ChangeInterceptor<IAuditEntity>.

    public class AuditChangeInterceptor : ChangeInterceptor<IAuditEntity>
    {
        public override void OnBeforeInsert(ObjectStateManager manager, IAuditEntity item)
        {
            base.OnBeforeInsert(manager, item);
            item.InsertDateTime = DateTime.Now;
            item.InsertUser = System.Threading.Thread.CurrentPrincipal.Identity.Name;
            item.UpdateDateTime = DateTime.Now;
            item.UpdateUser = System.Threading.Thread.CurrentPrincipal.Identity.Name;
        }

        public override void OnBeforeUpdate(ObjectStateManager manager, IAuditEntity item)
        {
            base.OnBeforeUpdate(manager, item);
            item.UpdateDateTime = DateTime.Now;
            item.UpdateUser = System.Threading.Thread.CurrentPrincipal.Identity.Name;
        }
    }

I plugged this into my app, and it worked on the first go.

Another common scenario I encounter is “soft-deletes.” A “soft-delete” is a virtual record deletion that does not actually remove the record from the database. Instead it sets an IsDeleted flag on the record, and the record is then excluded from other queries. The problem with soft-deletes is that developers and report writers always have to remember to add the “IsDeleted == false” criterion to every query in the system that touches the affected records. It would be great to replace the standard delete functionality with a soft-delete, and to modify the IQueryable to return only records for which “IsDeleted == false.” Unfortunately, I was unable to find a clean way to add query-interceptors to the data model to keep deleted records from being returned. However, I was able to get the basic soft-delete ChangeInterceptor to work. Here is that code.

    public interface ISoftDelete
    {
        bool IsDeleted { get; set; }
    }

    public class SoftDeleteChangeInterceptor : ChangeInterceptor<ISoftDelete>
    {
        public override void OnBeforeInsert(ObjectStateManager manager, ISoftDelete item)
        {
            base.OnBeforeInsert(manager, item);
            item.IsDeleted = false;
        }

        public override void OnBeforeDelete(ObjectStateManager manager, ISoftDelete item)
        {
            if (item.IsDeleted)
                throw new InvalidOperationException("Item is already deleted.");

            base.OnBeforeDelete(manager, item);
            item.IsDeleted = true;
            manager.ChangeObjectState(item, EntityState.Modified);
        }
    }
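In the absence of query-interceptors, one stopgap for the read side is an explicit, opt-in filter. The sketch below is a suggestion rather than part of the interceptor code: `NotDeleted` is a hypothetical extension method, ISoftDelete is redeclared only so the example stands alone, and `Story` is a cut-down stand-in. Whether a given Linq provider translates a predicate written against the interface is an assumption to verify; the `class` constraint is there to avoid a boxing conversion in the expression tree.

```csharp
using System.Linq;

// Redeclared so the sketch is self-contained; same shape as the interface above.
public interface ISoftDelete
{
    bool IsDeleted { get; set; }
}

public static class SoftDeleteQueryExtensions
{
    // Hypothetical opt-in filter: callers must remember to apply it,
    // but the criterion itself lives in exactly one place.
    public static IQueryable<T> NotDeleted<T>(this IQueryable<T> source)
        where T : class, ISoftDelete
    {
        return source.Where(item => !item.IsDeleted);
    }
}

// Cut-down entity for the example.
public class Story : ISoftDelete
{
    public string Title { get; set; }
    public bool IsDeleted { get; set; }
}
```

This does not solve the “always have to remember” problem, since a query that forgets to call `NotDeleted()` still returns deleted rows. It only keeps the rule from being retyped in every query.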

Here’s the complete diagram of the code:

EF4 has come a long way with respect to supporting extensibility. It still needs query-interceptors to reach feature parity with other ORM tools such as NHibernate, but I suspect that it is just a matter of time before the MS developers get around to adding that functionality. For now, you can use the interceptor model I’ve demo’ed here to add functionality to your data models. Perhaps you could use them to add logging, validation, or security checks to your models. What can you come up with?

Configuring ORMs through fluent API calls is relatively new to me. For the last three years or so I’ve been using EF1 and Linq-To-Sql as my data modeling tools of choice. My first exposure to a code-first ORM tool came in June when I started working with Fluent NHibernate. As interesting as that has been, I hadn’t really been faced with the issue of proper configuration because I’ve had someone on our team who does it easily. This weekend I started working on a sample project using the EF4 CTP, and the biggest stumbling block has been modeling the relationships.

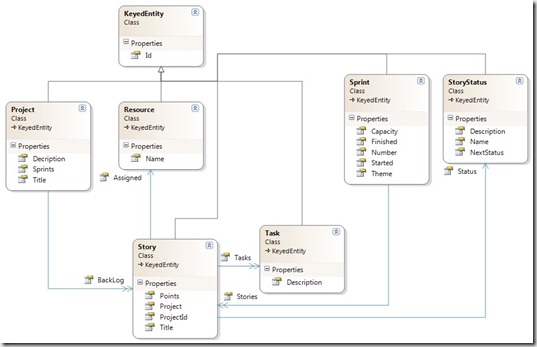

The context code is a SCRUM process management app I’m writing. Here’s the model:

The relationship I tried to model was the one between Project and Story via the Backlog property. Since “Project” is the first entity I needed to write, my natural inclination was to model the relationship to “Story” first.

    public class DataContext : DbContext
    {
        public DbSet<Project> Projects { get; set; }
        public DbSet<Resource> Resources { get; set; }
        public DbSet<Sprint> Sprints { get; set; }
        public DbSet<Story> Stories { get; set; }
        public DbSet<StoryStatus> StoryStatuses { get; set; }
        public DbSet<Task> Tasks { get; set; }

        protected override void OnModelCreating(System.Data.Entity.ModelConfiguration.ModelBuilder builder)
        {
            base.OnModelCreating(builder);

            builder
                .Entity<Project>()
                .HasMany(e => e.BackLog);

I knew that “Story” would be slightly more complex because it has two properties that map back to “Project.” These are the “Project” property and the “ProjectId” property. Some of the EF4 samples I found refer to a “Relationship” extension method that I was unable to find in the API, so I was fairly confused. I finally figured out what I needed to do by reading this post from Scott Hanselman, though he doesn’t specifically highlight the question I was trying to answer.

This is the mapping code I created for “Story:”

    builder.Entity<Story>()
        .HasRequired(s => s.Project)
        .HasConstraint((story, project) => story.ProjectId == project.Id);

and this is the code I’m using to create a new Story for a project:

    [HttpPost]
    public ActionResult Create(Story model)
    {
        using (var context = new DataContext())
        {
            var project = context.Projects.Single(p => p.Id == model.ProjectId);
            project.BackLog.Add(model);
            context.SaveChanges();
            return RedirectToAction("Backlog", "Story", new {id = project.Id});
        }
    }

When I tried to save the story, the data context threw various exceptions. I thought I could avoid the problem by rewriting the code so that I was just adding the story directly to the “Stories” table on the DataContext (which I think will ultimately be the right thing as it saves an unnecessary database call), but that would have been hacking around the problem and not really understanding what was wrong with what I was doing. It just took some staring at Scott Hanselman’s code sample for a while to realize what was wrong with my approach. Before I explain it, let me show you the mapping code that works.

    protected override void OnModelCreating(System.Data.Entity.ModelConfiguration.ModelBuilder builder)
    {
        base.OnModelCreating(builder);

        builder
            .Entity<Project>()
            .Property(p => p.Title)
            .IsRequired();

        // api no longer has Relationship() extension method.
        builder.Entity<Story>()
            .HasRequired(s => s.Project)
            .WithMany(p => p.BackLog)
            .HasConstraint((story, project) => story.ProjectId == project.Id);
    }

Notice what’s missing? I completely yanked the mapping of “Project->Stories” from the “Project” model’s perspective. Instead, I map the one-to-many relationship from the child-entity’s perspective, i.e., “Story.” Here’s how to read the mapping.

    // api no longer has Relationship() extension method.
    builder.Entity<Story>()                  // The entity Story
        .HasRequired(s => s.Project)         // has a required property called "Project" of type "Project"
        .WithMany(p => p.BackLog)            // and "Project" has a reference back to "Story" through its "BackLog" collection property
        .HasConstraint((story, project) => story.ProjectId == project.Id); // and Story.ProjectId is the same as Story.Project.Id

The key here is understanding that one-to-many relationships must be modeled from the perspective of the child-entity.
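For readers on a released version of Entity Framework Code First (4.1 through 6), the same child-side mapping is written with `DbModelBuilder` and `HasForeignKey`; as far as I know the CTP-era `HasConstraint` call used above is not part of the released API. The sketch below assumes cut-down `Project` and `Story` classes with only the members the mapping touches, and it needs a reference to the EntityFramework package to compile.

```csharp
using System.Collections.Generic;
using System.Data.Entity;

// Cut-down models: only the members involved in the relationship.
public class Project
{
    public int Id { get; set; }
    public string Title { get; set; }
    public virtual ICollection<Story> BackLog { get; set; }
}

public class Story
{
    public int Id { get; set; }
    public int ProjectId { get; set; }
    public virtual Project Project { get; set; }
}

public class DataContext : DbContext
{
    public DbSet<Project> Projects { get; set; }
    public DbSet<Story> Stories { get; set; }

    protected override void OnModelCreating(DbModelBuilder modelBuilder)
    {
        base.OnModelCreating(modelBuilder);

        // Still mapped from the child entity's perspective.
        modelBuilder.Entity<Story>()
            .HasRequired(s => s.Project)        // Story requires a Project
            .WithMany(p => p.BackLog)           // Project exposes its stories via BackLog
            .HasForeignKey(s => s.ProjectId);   // Story.ProjectId is the foreign key
    }
}
```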