The intro was originally in French (translated here); the talk itself is in English. Enjoy!

USI 2010: the must-attend IT conference in France

The annual gathering of geeks and bosses who want IT that transforms our societies, USI is a two-day conference on IT topics: information systems architecture, cloud computing, iPhone, Agile, Lean management, Java, .NET… USI 2010 brought together 500 people around a program built on four themes: Innovative, Sustainable, Open, and Value.

More information at www.universite-du-si.com

I’ve been exposed to some better repository organization lately. In response to what I’ve learned, I’ve updated the yodelay project structure to make it easier to build with the external dependencies. It seems obvious in retrospect :-). The new structure looks like this:

/ // source root

/src // directory for project source code

/libs // directory for non-Framework assembly dependencies

This should make it possible to download and build the project whether or not you have already installed NUnit and Rhino Mocks on your machine.

Scott Gu just announced a new view engine called “Razor” for ASP.NET MVC, and it looks really sweet! Check it out here. I can’t wait to get my hands on the beta!

When working with the ObservableCollection or INotifyCollectionChanged interface, it is common to see code like the following:

    void OnCollectionChanged(object sender, NotifyCollectionChangedEventArgs e)
    {
        switch (e.Action)
        {
            case NotifyCollectionChangedAction.Add:
                HandleAddedItems(e);
                break;
            case NotifyCollectionChangedAction.Move:
                break;
            case NotifyCollectionChangedAction.Remove:
                HandleRemovedItems(e);
                break;
            case NotifyCollectionChangedAction.Replace:
                HandleReplacedItems(e);
                break;
            case NotifyCollectionChangedAction.Reset:
                HandleClearItems(e);
                break;
        }
    }

There’s nothing particularly wrong with this code except that it’s kind of bulky and it starts to crop up all over your application. There’s another problem that’s not immediately obvious. The “Reset” action is raised when the ObservableCollection is cleared, but its event args do not contain the items that were removed from the collection. If your logic calls for processing removed items when they’re cleared, the built-in API offers you nothing. You have to keep your own shadow copy of the collection so that you can respond to the Clear() correctly.

For that reason I wrote and added ObservableCollectionHandler to manage these events for you. It accepts three kinds of delegates for responding to changes in the source collection: ItemAdded, ItemRemoved, and ItemReplaced actions. (It would be easy to add ItemMoved as well, but I have seldom had a need for that so I coded the critical path first.) The handler maintains a shadow copy of the list so that the ItemRemoved delegates are called in response to the Clear() command.

    [Test]
    public void OnItemAdded_ShouldPerformAction()
    {
        // Arrange: Setup the test context
        int i = 0;
        var collection = new ObservableCollection<Employee>();
        var handler = new ObservableCollectionHandler<Employee>(collection)
            .OnItemAdded(e => i++);

        // Act: Perform the action under test
        collection.Add(new Employee());

        // Assert: Verify the results of the action.
        Require.That(i).IsEqualTo(1);
    }
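To make the shadow-copy idea concrete, here is a minimal sketch of how a handler might report removed items when the collection is cleared. This is not the actual ObservableCollectionHandler from Yodelay: the class name `ShadowCopyHandler` and its constructor signature are made up for illustration, and it only covers the "removed" callback.

```csharp
using System;
using System.Collections.Generic;
using System.Collections.ObjectModel;
using System.Collections.Specialized;

// Hypothetical sketch, not the Yodelay implementation.
public class ShadowCopyHandler<T>
{
    private readonly ObservableCollection<T> _source;
    private readonly List<T> _shadow;          // mirror of the source collection
    private readonly Action<T> _onItemRemoved; // called once per removed item

    public ShadowCopyHandler(ObservableCollection<T> source, Action<T> onItemRemoved)
    {
        if (source == null) throw new ArgumentNullException("source");
        if (onItemRemoved == null) throw new ArgumentNullException("onItemRemoved");

        _source = source;
        _shadow = new List<T>(source);
        _onItemRemoved = onItemRemoved;
        _source.CollectionChanged += OnCollectionChanged;
    }

    private void OnCollectionChanged(object sender, NotifyCollectionChangedEventArgs e)
    {
        switch (e.Action)
        {
            case NotifyCollectionChangedAction.Remove:
            case NotifyCollectionChangedAction.Replace:
                // These actions carry the old items in the event args.
                foreach (T item in e.OldItems) _onItemRemoved(item);
                break;

            case NotifyCollectionChangedAction.Reset:
                // Reset carries no OldItems, so fall back to the shadow copy.
                foreach (T item in _shadow) _onItemRemoved(item);
                break;
        }

        // Re-sync the shadow copy with whatever the source contains now.
        _shadow.Clear();
        _shadow.AddRange(_source);
    }
}
```

With this sketch, calling `Clear()` on a collection that holds three items should invoke the callback three times, once per item, even though the Reset event args themselves are empty. The trade-off is the extra memory and copying needed to keep the mirror in sync on every change.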

Another common need with ObservableCollections is tracking which items were added to, modified in, and removed from the source collection. To meet this need I wrote the ChangeTracker class. ChangeTracker uses ObservableCollectionHandler to set up activities in response to changes in the source collection. ChangeTracker maintains a list of additions to and removals from the source collection. It can also maintain a list of modified items, assuming the items in the collection implement INotifyPropertyChanged.

Here is a sample unit test indicating its usage:

    [Test]
    public void GetChanges_AfterAdd_ShouldReturnAddedItems()
    {
        // Arrange: Setup the test context
        var source = new ObservableCollection<Employee>();
        var tracker = new ChangeTracker<Employee>(source);

        // Act: Perform the action under test
        var added = new Employee();
        source.Add(added);

        // Assert: Verify the results of the action.
        var changes = tracker.GetChanges(ChangeType.All);
        Require.That(changes.Any()).IsTrue();
        Require.That(tracker.HasChanges).IsTrue();

        var change = changes.First();
        Require.That(change).IsNotNull();
        Require.That(change.Type).IsEqualTo(ChangeType.Add);
        Require.That(change.Value).IsTheSameAs(added);
    }

The full source code and unit tests can be found here.

“We have to do {Insert Questionable Practice Here} in order to be consistent with the rest of the application.”

I’m so tired of hearing this statement. When a statement such as this is uttered, it is intended to do three things: 1) brush aside the necessity for a design change, 2) give the illusion of admitting that something is wrong with the current design (or lack thereof) while at the same time 3) justifying the current design.

“Consistency” is not a design principle. “Consistency” is a virtue if and only if you are following design principles, or style guidelines. “Consistency” describes your attitude toward good design principles and coding standards. It presumes the existence of good design principles and coding standards, and can never be used as a replacement for them. Who can count the coding crimes that have been committed in the name of “consistency?” The intent of a statement like this is to obliterate the distinction between good and bad design, and make bad design seem practical.

It isn’t.

Don’t fall for it. Repeating the same awful mess over and over again can never be good.

As the requirements put pressure on your design to change, you should change it. You should change it immediately. If the change is large, you may have to plan the change and execute it in stages, keeping the code functional and migrating it over time—but you should start immediately. In addition, you should generally complete one design change before embarking on another—at least if it’s in the same area of your application.

If you’re working in a team environment, you will need to communicate the necessity of medium or large-scale design shifts to your team. You should listen to possible objections (after all you may be missing some important information). If the change is small (meaning its scope is localized to the particular task you are working on), just do it. If the team is not generally receptive to improving the design of the code, either change the team or change teams.

You should consistently improve the design of code in the face of new requirements. You should consistently apply SOLID, DRY, YAGNI, TDD and other design and process principles to all new and existing code. You should consistently look for opportunities to refactor and increase test coverage. You should never use “consistency” as an excuse to shirk the responsibility of taking on a necessary change.

“Consistency” properly applies to two things: principles and style. You should consistently practice the best design principles and processes available for the craft of writing software. You should consistently conform to the style of code that your team endorses. If your team likes to put curly braces on new lines instead of at the end of a line, conform. If your team likes to use Hungarian notation (shudder), conform, though you should probably try to talk them out of it (ha!). If your team consistently writes functions with 4000 lines of code, rebel. Lead by example and write short, intuitively named, expectation-satisfying functions.

Rationally, there can be no conflict between consistency and good design. If you pretend there is, then you have accepted a contradiction. Well-designed software is “well-designed” precisely because it makes the customer happy, is easily debugged, and is easily extended with new features. “Consistency,” it is argued, makes it easier for other developers to read the code. It isn’t easier to read if the code is full of poorly named variables and functions, disorganized class hierarchies, and 4000-line functions full of stale, irrelevant comments. Well-designed code is easier to read, assuming a basic knowledge of OO concepts and design patterns. Well-designed code has a logical organization and leads the developer through the task of understanding it.

I came across this post on Marlon Grech’s blog. Marlon has written a very simple wrapper for registering and unregistering INotifyPropertyChanged event handlers. I modified his solution slightly, caching the result of the PropertyInfo resolution in the PropertyChangedSubscriber instead of recalculating it every time.

Here is my version of the PropertyChangedSubscriber.

    using System;
    using System.ComponentModel;
    using System.Linq.Expressions;
    using System.Reflection;

    /// <summary>
    /// Shortcut to subscribe to PropertyChanged on an INotifyPropertyChanged and execute an action when it fires
    /// </summary>
    /// <typeparam name="TSource">Must implement INotifyPropertyChanged</typeparam>
    /// <typeparam name="TProperty">Can be any type</typeparam>
    public class PropertyChangedSubscriber<TSource, TProperty>
        : IDisposable where TSource : class, INotifyPropertyChanged
    {
        // Resolved once in the constructor so the expression is not re-inspected on every event.
        private readonly PropertyInfo _propertyInfo;
        private readonly TSource _source;
        private Action<TSource> _onChange;

        public PropertyChangedSubscriber(TSource source, Expression<Func<TSource, TProperty>> property)
        {
            // "as" avoids an InvalidCastException when the body is not a member access.
            var memberExpression = property.Body as MemberExpression;
            var propertyInfo = memberExpression == null ? null : memberExpression.Member as PropertyInfo;
            if (propertyInfo == null)
            {
                throw new ArgumentException("The lambda expression 'property' should point to a valid Property");
            }

            _propertyInfo = propertyInfo;
            _source = source;
            source.PropertyChanged += SourcePropertyChanged;
        }

        private void SourcePropertyChanged(object sender, PropertyChangedEventArgs e)
        {
            // No action is registered until Do() or DoOnce() has been called.
            var onChange = _onChange;
            if (onChange != null && IsTargetProperty(e.PropertyName))
            {
                onChange(sender as TSource);
            }
        }

        /// <summary>
        /// Registers the action to execute and returns an IDisposable so that you can unregister
        /// </summary>
        /// <param name="onChanged">The action to execute</param>
        /// <returns>The IDisposable so that you can unregister</returns>
        public IDisposable Do(Action<TSource> onChanged)
        {
            _onChange = onChanged;
            return this;
        }

        /// <summary>
        /// Executes the action only once and automatically unregisters
        /// </summary>
        /// <param name="onChanged">The action to be executed</param>
        public void DoOnce(Action<TSource> onChanged)
        {
            Action<TSource> dispose = x => Dispose();
            _onChange = (Action<TSource>)Delegate.Combine(onChanged, dispose);
        }

        private bool IsTargetProperty(string propertyName)
        {
            return _propertyInfo.Name == propertyName;
        }

        #region Implementation of IDisposable

        /// <summary>
        /// Unregisters the PropertyChanged handler
        /// </summary>
        public void Dispose()
        {
            _source.PropertyChanged -= SourcePropertyChanged;
        }

        #endregion
    }
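A short usage sketch may help show how the subscriber is meant to be consumed. It assumes the PropertyChangedSubscriber class above is compiled alongside it; the `Person` class and the `SubscriberDemo` helper are hypothetical and exist only for this illustration.

```csharp
using System;
using System.ComponentModel;

// Hypothetical model class used only for this illustration.
public class Person : INotifyPropertyChanged
{
    private string _name;

    public event PropertyChangedEventHandler PropertyChanged;

    public string Name
    {
        get { return _name; }
        set
        {
            _name = value;
            var handler = PropertyChanged;
            if (handler != null) handler(this, new PropertyChangedEventArgs("Name"));
        }
    }
}

public static class SubscriberDemo
{
    // Returns how many Name changes were observed while subscribed.
    public static int CountNameChanges()
    {
        var person = new Person();
        int changes = 0;

        // Do(...) returns the subscriber as an IDisposable; disposing it unsubscribes.
        using (new PropertyChangedSubscriber<Person, string>(person, p => p.Name)
                   .Do(p => changes++))
        {
            person.Name = "Ada";   // observed
            person.Name = "Grace"; // observed
        }

        person.Name = "Linus";     // not observed: Dispose() removed the handler
        return changes;
    }
}
```

Wrapping the subscription in a `using` block is one way to make sure the handler is removed, which matters because a lingering PropertyChanged subscription keeps the subscriber reachable from the source object.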

I’ve been Internet-less for a few days and it’s been killing me. Internet is like coffee—it makes the world go round!

I’ve made a few updates to Yodelay that I wanted to tell you about.

First, I added an ASP.NET MVC project. I wanted to see if I could use the MVVM pattern in ASP.NET MVC. I’m not completely happy with my implementation, and the UI is kind of rough, but it works. I’ll work on cleaning it up later.

Second, I added a library for non-attribute-based validation. My problem with validation frameworks that rely on attributes is that I don’t always have access to the code for the classes I need to create business rules for. The new library uses a fluent API to configure rules for classes and properties.

Example:

    ConfigureRules.ForType<BusinessObject>()
        .If(e => e.Id > 0)
        .Property(e => e.Name)
        .Must.Not().BeNull();

    var testObject = new BusinessObject() { Id = 1, Name = "Testing" };

    Rules.Enforce(testObject);

Third, I added some extension methods for the Range class which allow the developer to test for adjacent, intersecting, and disjoint ranges. Further, the range API will now find gaps in lists of ranges.
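To illustrate what such range tests can look like, here is a rough sketch over a closed integer range. It is not Yodelay's Range class: the `IntRange` type and the method names `Intersects`, `IsAdjacentTo`, `IsDisjointFrom`, and `FindGaps` are assumptions made for this example.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical closed integer range [Start, End]; not Yodelay's Range class.
public struct IntRange
{
    public readonly int Start;
    public readonly int End;

    public IntRange(int start, int end)
    {
        if (end < start) throw new ArgumentException("end must be >= start");
        Start = start;
        End = end;
    }
}

public static class IntRangeExtensions
{
    // True when the two ranges share at least one value.
    public static bool Intersects(this IntRange a, IntRange b)
    {
        return a.Start <= b.End && b.Start <= a.End;
    }

    // True when one range ends exactly one step before the other begins.
    public static bool IsAdjacentTo(this IntRange a, IntRange b)
    {
        return (long)a.End + 1 == b.Start || (long)b.End + 1 == a.Start;
    }

    // True when the ranges share no values (adjacent ranges are also disjoint).
    public static bool IsDisjointFrom(this IntRange a, IntRange b)
    {
        return !a.Intersects(b);
    }

    // Returns the uncovered ranges that lie between the given ranges.
    public static List<IntRange> FindGaps(this IEnumerable<IntRange> ranges)
    {
        var gaps = new List<IntRange>();
        long coveredUpTo = 0; // highest value covered so far; valid once first is false
        bool first = true;

        foreach (var range in ranges.OrderBy(r => r.Start))
        {
            if (!first && range.Start > coveredUpTo + 1)
            {
                gaps.Add(new IntRange((int)(coveredUpTo + 1), range.Start - 1));
            }
            coveredUpTo = first ? range.End : Math.Max(coveredUpTo, range.End);
            first = false;
        }
        return gaps;
    }
}
```

Under these definitions, the list [1, 3], [7, 9], [12, 15] has two gaps, [4, 6] and [10, 11], while [1, 3] and [4, 6] are adjacent and disjoint but do not intersect. Sorting by start and tracking the highest covered value keeps the gap search to a single pass after the sort.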

Finally, I removed the assembly signing. When I added the key files before, I password-protected them. This makes it hard for people who download the source code to compile it. I’ve removed all assembly signing for the short term. When I’m ready to build an installation package, I’ll re-sign the assemblies without password protection.

My girlfriend Emily was recently accepted into a graduate program at Marymount University in Arlington, VA. Through the efforts of a diligent recruiter at Apex Systems I have accepted a position at USIS in the Vienna, VA area. This will be a big change for me. Not only will it mark the first time I have lived away from upstate South Carolina, it will also be the first time I’ve lived and worked in a metropolitan area the size of DC.

We have found an apartment in Annandale which is merely outrageously expensive (or in DC area terms, "cheap"). I am looking forward to attending the Capital Area .NET developers group, as well as touring the homes of some of the nation’s founding fathers. Once I finish my degree, I intend to begin taking guitar lessons and finding ways to be useful to the Ayn Rand Center.

My new job will most likely focus on web development. I’m looking forward to dusting off my ASP.NET chops and giving them a much-needed upgrade. I’m also hoping to be able to do more experimentation with Silverlight and OData. My primary hobby interest at the moment is in fluent APIs. I’m getting much better at defining the API I’d like to have (yodelay has a recent example of that; see the “Require.That… api” in the Validation namespace), but I still lack the skills needed to bring the more complex APIs into implementation. Perhaps I’ll meet some devs in DC who can help me with that.

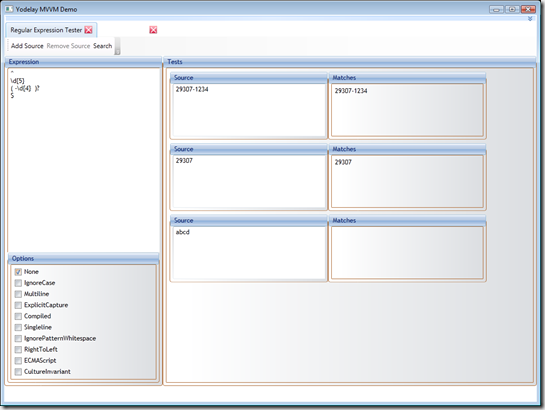

Yodelay has been updated. I’ve modified the Regular Expression tester so that it supports any number of test contexts for a single expression. In addition, you can search regexlib.com for a useful expression.

This screen is fully implemented using MVVM. The search window serves as an example of using MVVM with dialogs.

I’ve also pulled in the themes from WpfThemes and integrated the ThemeManager.

Check it out!

I have created an open-source project on CodePlex called “Yodelay”. From the project description:

The Yodelay .NET Framework Extensions project provides a library of components that make many kinds of programming tasks simpler. These include basic MVVM components, Unit Test Extensions, and a poor-man’s Dependency Injection library.

Most of the classes and extension methods in this library are reimplementations or adaptations of components I have written in other places. Some of them have been found in various forms on developer blogs. Where this is true, I have indicated in the comments where I retrieved the original source code. This library is not just a collection of random classes. The attempt here is to bring all of these components together and use them in an integrated fashion to develop stable applications faster.

Not all code in this project is currently covered by unit tests. This is because many of the classes were adapted from blogs or other existing projects and may not have been developed using TDD. Every effort will be made to bring existing classes under test coverage, and to practice TDD when adding new code.

Why did I call it Yodelay? For no other reason than I like the way the word sounds. It rolls off the tongue easily, which is the effect I hope the library has on applications.

There is no installer yet. Yodelay is in version 0.1, but there are already quite a few goodies in the library. Everything is built against the 4.0 framework. I will try to work on it a little each week, adding new components and demo projects when I can. In the near future I will be signing the assemblies and building an installer for them.

Enjoy!