System.Enum is a powerful type to use in the .NET Framework. It’s best used when the list of possible states is known and is defined by the system that uses them. Enumerations are not good in any situation that requires third-party extensibility. While I love enumerations, they do present some problems.

First, the code that uses them often exists in the form of switch statements that get repeated about the code base. This violation of DRY is not good.

Second, if a new value is added to the enumeration, any code that relies on the enumeration must take the new value into account. While not technically a violation of the LSP, it’s close.

Third, enums are limited in what they can express. They are basically a set of named integer constants treated as a separate type. Any other data or functionality you might wish them to have has to be added on through other objects. A common requirement is to present a human-readable version of the enum field to the user, which is most often accomplished through the use of the DescriptionAttribute. A further problem is the fact that they are often serialized as integers, which means that the textual representation has to be reproduced in whatever database, reporting tool, or other system consumes the serialized value.
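To make the third point concrete, here is a minimal sketch of the usual DescriptionAttribute workaround. The `WorkItemStatus` enum and the `EnumDescriptions.Describe` helper are hypothetical names made up for illustration; only `System.ComponentModel.DescriptionAttribute` and the reflection calls come from the framework.

```csharp
using System;
using System.ComponentModel;
using System.Reflection;

// Hypothetical enum used only for this illustration.
public enum WorkItemStatus
{
    Pending,

    [Description("In Progress")]
    InProgress
}

public static class EnumDescriptions
{
    // Returns the [Description] text for an enum field,
    // or the field name when no attribute is present.
    public static string Describe(Enum value)
    {
        FieldInfo field = value.GetType().GetField(value.ToString());
        if (field == null)
        {
            // Happens for numeric values that have no named field.
            return value.ToString();
        }

        var attribute = (DescriptionAttribute)Attribute.GetCustomAttribute(
            field, typeof(DescriptionAttribute));

        return attribute != null ? attribute.Description : value.ToString();
    }
}
```

Note how the display text lives in an attribute and the lookup lives in a separate helper: the enum itself carries none of it.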

Polymorphism is always an alternative to enumerations. Polymorphism brings a problem of its own—lack of discoverability. It would be nice if we could have the power of a polymorphic type coupled with the discoverability of an enumeration.

Fortunately, we can. It’s a variation on the Flyweight pattern. I don’t think it fits the technical definition of the Flyweight pattern because it has a different purpose. Flyweight is generally employed to minimize memory usage. We’re going to use some of the mechanics of the pattern employed to a different purpose.

Consider a simple domain model with two objects: Task and TaskState. A Task has a Name, Description, and TaskState. A TaskState has a Name, Value, and boolean method that indicates if a task can move from one state to the other. The available states are Pending, InProgress, Completed, Deferred, and Canceled.

If we implemented this polymorphically, we’d create an abstract class or interface to represent the TaskState. Then we’d provide the various property and method implementations for each subclass. We would have to search the type system to discover which subclasses are available to represent TaskStates.

Instead, let’s create a sealed TaskState class with a defined list of static TaskState instances and a private constructor. We seal the class because our system controls the available TaskStates. We privatize the constructor because we want clients of this class to be forced to use the pre-defined static instances. Finally, we initialize the static instances in a static constructor on the class. Here’s what the code looks like:

    public sealed class TaskState
    {
        public static TaskState Pending { get; private set; }
        public static TaskState InProgress { get; private set; }
        public static TaskState Completed { get; private set; }
        public static TaskState Deferred { get; private set; }
        public static TaskState Canceled { get; private set; }

        public string Name { get; private set; }
        public string Value { get; private set; }

        private readonly List<TaskState> _transitions = new List<TaskState>();

        private TaskState AddTransition(TaskState value)
        {
            this._transitions.Add(value);
            return this;
        }

        public bool CanTransitionTo(TaskState value)
        {
            return this._transitions.Contains(value);
        }

        private TaskState()
        {
        }

        static TaskState()
        {
            BuildStates();
            ConfigureTransitions();
        }

        private static void ConfigureTransitions()
        {
            Pending.AddTransition(InProgress).AddTransition(Canceled);
            InProgress.AddTransition(Completed).AddTransition(Deferred).AddTransition(Canceled);
            Deferred.AddTransition(InProgress).AddTransition(Canceled);
        }

        private static void BuildStates()
        {
            Pending = new TaskState() { Name = "Pending", Value = "Pending", };
            InProgress = new TaskState() { Name = "In Progress", Value = "InProgress", };
            Completed = new TaskState() { Name = "Completed", Value = "Completed", };
            Deferred = new TaskState() { Name = "Deferred", Value = "Deferred", };
            Canceled = new TaskState() { Name = "Canceled", Value = "Canceled", };
        }
    }

This pattern allows us to consume the class as if it were an enumeration:

    var task = new Task()
    {
        State = TaskState.Pending,
    };

    if (task.State.CanTransitionTo(TaskState.Completed))
    {
        // do something
    }
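The owning object can also enforce the transition rules itself. The following is only a sketch under assumptions: `WorkItem` is a hypothetical stand-in for the Task class (renamed to avoid confusion with System.Threading.Tasks.Task), `MoveTo` is an invented method, and the `TaskState` shown is a trimmed three-state copy so the block compiles on its own.

```csharp
using System;
using System.Collections.Generic;

// Trimmed-down stand-in for the TaskState class (three states only).
public sealed class TaskState
{
    public static readonly TaskState Pending = new TaskState("Pending");
    public static readonly TaskState InProgress = new TaskState("InProgress");
    public static readonly TaskState Completed = new TaskState("Completed");

    private readonly List<TaskState> _transitions = new List<TaskState>();

    static TaskState()
    {
        // Static field initializers above have already run at this point.
        Pending._transitions.Add(InProgress);
        InProgress._transitions.Add(Completed);
    }

    private TaskState(string value)
    {
        Value = value;
    }

    public string Value { get; }

    public bool CanTransitionTo(TaskState next)
    {
        return _transitions.Contains(next);
    }
}

// Hypothetical owner of a TaskState; not part of the original code.
public sealed class WorkItem
{
    public string Name { get; set; }

    public TaskState State { get; private set; } = TaskState.Pending;

    // Rejects any move the current state does not allow.
    public void MoveTo(TaskState next)
    {
        if (!State.CanTransitionTo(next))
        {
            throw new InvalidOperationException(
                "Cannot move from " + State.Value + " to " + next.Value + ".");
        }

        State = next;
    }
}
```

Callers never touch a switch statement: the rule about which moves are legal lives in one place, on the state instances.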

We still have the flexibility of polymorphic instances. I’ve used this pattern several times with great effect in my software. I hope it benefits you as much as it has me.

I saw Matthew Podwysocki speak on Reactive Extensions at the most recent DC Alt .NET meeting. I’ve heard some buzz about Reactive Extensions (Rx) as LINQ over Events. That sounded cool, so I put the sticky note in the back of my mind to look into it later. Matthew’s presentation blew my mind a bit. Rx provides so much functionality and is so different from traditional event programming that I thought it would be helpful for me to retrace a few of the first necessary steps that would go into creating something as powerful as Rx. To that end, I started writing a DisposableEventObserver class.

This class has two goals at this point:

- Replace the traditional EventHandler += new EventHandler() syntax with an IDisposable syntax.

- Add conditions to the Observer that determine if it will handle the events.

This is learning code. What I mean by this is that it is doubtful that the code I’m writing here will ever be used in a production application since Rx will be far more capable than what I write here. The purpose of this code is to help me (and maybe you) to gain insight into how Rx works. There are two notable Rx features that I will not be handling in v1 of DisposableEventObserver:

- Wrangling Asynchronous Events.

- Composability.

The first test I wrote looked something like this:

    [TestFixture]
    public class DisposableEventObserverTests
    {
        public event EventHandler<EventArgs> LocalEvent;

        [Test]
        public void SingleSubscriber()
        {
            // Arrange: Setup the test context
            var count = 0;

            // Act: Perform the action under test
            using (var observer = new DisposableEventObserver<EventArgs>(this.LocalEvent,
                (sender, e) => { count += 1; }))
            {
                this.LocalEvent.Invoke(null, null);
                this.LocalEvent.Invoke(null, null);
                this.LocalEvent.Invoke(null, null);
            }

            // Assert: Verify the results of the action.
            Assert.That(count, Is.EqualTo(3));
        }
    }

The test fixture served as my eventing object. I’m passing the event handler as a lambda. There were two interesting things about this approach. The first is that the type required to pass this.LocalEvent is the same delegate type as that required to pass the handler. The second is that this code did not work.

I was a little confused as to why the test didn’t pass. The lines inside the using block blew up with a NullReferenceException when I tried to reference this.LocalEvent. This is odd because inside the Observer I was definitely adding the handler to the event delegate. What’s going on here? It turns out that although Events look for all intents and purposes like a standard delegate field of the same type, the .NET framework treats them differently. Events can only be invoked by the class that owns them. The event fields themselves cannot reliably be passed as parameters.
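My reading of the failure, sketched below with hypothetical names (`Publisher`, `TryAttachByValue`, `Attach`): delegates are immutable, so passing the event's backing field as an argument hands over a copy of the current reference, which is null when nobody has subscribed. Combining a handler into that copy never touches the event itself.

```csharp
using System;

// Hypothetical publisher used only to illustrate the behaviour.
public class Publisher
{
    public event EventHandler<EventArgs> LocalEvent;

    // Inside the declaring class the event name refers to the
    // backing delegate field, so it can be compared to null.
    public bool HasSubscribers
    {
        get { return LocalEvent != null; }
    }

    // Mimics passing the event as a constructor argument.
    public void TryAttachByValue(EventHandler<EventArgs> handler)
    {
        Attach(LocalEvent, handler);
    }

    private static void Attach(
        EventHandler<EventArgs> target, EventHandler<EventArgs> handler)
    {
        // Builds a new combined delegate and stores it in the local
        // parameter only; the event's backing field is unchanged.
        target += handler;
    }
}
```

After `TryAttachByValue` the event still has no subscribers, which matches the NullReferenceException seen when the test tried to invoke it.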

I backed up a bit and tried this syntax:

    [Test]
    public void SingleSubscriber()
    {
        // Arrange: Setup the test context
        var count = 0;
        EventHandler<EventArgs> evt = delegate { count += 1; };

        // Act: Perform the action under test
        using (var observer = new DisposableEventObserver<EventArgs>(this, "LocalEvent", evt))
        {
            this.LocalEvent.Invoke(null, null);
            this.LocalEvent.Invoke(null, null);
            this.LocalEvent.Invoke(null, null);
        }

        // Assert: Verify the results of the action.
        Assert.That(count, Is.EqualTo(3));
    }

This test implies an implementation that uses reflection to find the event and add the handler. This worked the first time at bat, however I don’t like that magic string “LocalEvent” sitting there. I thought back to Josh Smith’s PropertyObserver and wondered if I could do something similar. Here’s a test that takes an expression that resolves to the event:

    [Test]
    public void Subscribe_Using_Lambda()
    {
        // Arrange: Setup the test context
        var count = 0;
        EventHandler<EventArgs> evt = delegate { count += 1; };

        // Act: Perform the action under test
        using (var observer = new DisposableEventObserver<EventArgs>(this, () => this.LocalEvent, evt))
        {
            this.LocalEvent.Invoke(null, null);
            this.LocalEvent.Invoke(null, null);
            this.LocalEvent.Invoke(null, null);
        }

        // Assert: Verify the results of the action.
        Assert.That(count, Is.EqualTo(3));
    }

This looks much better to me. Now I’ll get a compile-error if the event name or signature changes.
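Whichever way the event is named, the observer ultimately has to find it by reflection and attach the handler. Here is a minimal sketch of the string-based variant, assuming a public event; `ReflectionEventSubscription` and `Ticker` are hypothetical names, and this is not the actual DisposableEventObserver implementation.

```csharp
using System;
using System.Reflection;

// Hypothetical disposable subscription that looks the event up by name.
public sealed class ReflectionEventSubscription : IDisposable
{
    private readonly object _source;
    private readonly EventInfo _eventInfo;
    private readonly Delegate _handler;

    public ReflectionEventSubscription(object source, string eventName, Delegate handler)
    {
        if (source == null) throw new ArgumentNullException("source");
        if (handler == null) throw new ArgumentNullException("handler");

        // GetEvent(string) only finds public events.
        EventInfo eventInfo = source.GetType().GetEvent(eventName);
        if (eventInfo == null)
        {
            throw new ArgumentException("No public event named " + eventName, "eventName");
        }

        _source = source;
        _eventInfo = eventInfo;
        _handler = handler;

        // Equivalent to: source.<eventName> += handler
        _eventInfo.AddEventHandler(_source, _handler);
    }

    public void Dispose()
    {
        // Equivalent to: source.<eventName> -= handler
        _eventInfo.RemoveEventHandler(_source, _handler);
    }
}

// Hypothetical event source for trying the subscription out.
public sealed class Ticker
{
    public event EventHandler<EventArgs> Tick;

    public void Raise()
    {
        EventHandler<EventArgs> handler = Tick;
        if (handler != null) handler(this, EventArgs.Empty);
    }
}
```

Wrapping the subscription in a `using` block means the handler is detached as soon as the block exits, which is the IDisposable syntax the first goal asks for.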

The next step is to add some conditionality to the event handling. While this class will not be Queryable like Rx, I’m going to use a similar Where() syntax to add conditions. I added the following test:

    [Test]
    public void Where_ConditionNotMet_EventShouldNotFire()
    {
        // Arrange: Setup the test context
        var count = 0;
        EventHandler<EventArgs> evt = delegate { count += 1; };

        // Act: Perform the action under test
        using (var observer = new DisposableEventObserver<EventArgs>(this,
            () => this.LocalEvent,
            evt).Where((sender, e) => e != null))
        {
            this.LocalEvent.Invoke(null, null);
            this.LocalEvent.Invoke(null, null);
            this.LocalEvent.Invoke(null, null);
        }

        // Assert: Verify the results of the action.
        Assert.That(count, Is.EqualTo(0));
    }

The Where condition specifies that the event args cannot be null. In this case count should never be incremented. I had to make several changes to the internals of the Observer class to make this work. Instead of registering “evt” with the event handler I had to create an interceptor method inside the Observer to test the criteria. If the criteria are met then “evt” will be called. I implemented Where as an “Add” function over a collection of Func<object, TEventArgs, bool>.
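The interceptor idea can be sketched in isolation. `FilteredHandler` and `Intercept` are hypothetical names chosen for this illustration; the real Observer class may be structured differently.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical wrapper: runs the handler only when every condition passes.
public sealed class FilteredHandler<TEventArgs> where TEventArgs : EventArgs
{
    private readonly EventHandler<TEventArgs> _handler;
    private readonly List<Func<object, TEventArgs, bool>> _conditions =
        new List<Func<object, TEventArgs, bool>>();

    public FilteredHandler(EventHandler<TEventArgs> handler)
    {
        if (handler == null) throw new ArgumentNullException("handler");
        _handler = handler;
    }

    // Adds a condition and returns this instance so calls can be chained.
    public FilteredHandler<TEventArgs> Where(Func<object, TEventArgs, bool> condition)
    {
        if (condition == null) throw new ArgumentNullException("condition");
        _conditions.Add(condition);
        return this;
    }

    // This method, not the user's handler, is what gets registered
    // with the event.
    public void Intercept(object sender, TEventArgs e)
    {
        if (_conditions.All(condition => condition(sender, e)))
        {
            _handler(sender, e);
        }
    }
}
```

With no conditions registered, `All` returns true and every event reaches the handler, so the unfiltered case keeps working.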

The full implementation can be found here, and the tests can be found here.

The intro is in French, but the talk is in English. Enjoy!

USI 2010: the must-attend IT conference in France

The annual gathering of Geeks and Bosses who want IT that transforms our societies, USI is a two-day conference on IT topics: information systems architecture, Cloud Computing, iPhone, Agile, Lean management, Java, .NET, and more. USI 2010 brought together 500 people around a program built on four themes: Innovative, Sustainable, Open, and Value.

More information at www.universite-du-si.com

When working with the ObservableCollection or INotifyCollectionChanged interface, it is common to see code like the following:

    void OnCollectionChanged(object sender, NotifyCollectionChangedEventArgs e)
    {
        switch (e.Action)
        {
            case NotifyCollectionChangedAction.Add:
                HandleAddedItems(e);
                break;
            case NotifyCollectionChangedAction.Move:
                break;
            case NotifyCollectionChangedAction.Remove:
                HandleRemovedItems(e);
                break;
            case NotifyCollectionChangedAction.Replace:
                HandleReplacedItems(e);
                break;
            case NotifyCollectionChangedAction.Reset:
                HandleClearItems(e);
                break;
        }
    }

There’s nothing particularly wrong with this code except that it’s kind of bulky, and that it starts to crop up all over your application. There’s another problem that’s not immediately obvious. The “Reset” action only gets fired when the ObservableCollection is cleared, but its event args do not contain the items that were removed from the collection. If your logic calls for processing removed items when they’re cleared, the built-in API offers you nothing. You have to keep your own shadow copy of the collection so that you can respond to the Clear() correctly.

For that reason I wrote and added ObservableCollectionHandler to manage these events for you. It accepts three kinds of delegates for responding to changes in the source collection: ItemAdded, ItemRemoved, and ItemReplaced actions. (It would be easy to add ItemMoved as well, but I have seldom had a need for that so I coded the critical path first.) The handler maintains a shadow copy of the list so that the ItemRemoved delegates are called in response to the Clear() command.

    [Test]
    public void OnItemAdded_ShouldPerformAction()
    {
        // Arrange: Setup the test context
        int i = 0;
        var collection = new ObservableCollection<Employee>();
        var handler = new ObservableCollectionHandler<Employee>(collection)
            .OnItemAdded(e => i++);

        // Act: Perform the action under test
        collection.Add(new Employee());

        // Assert: Verify the results of the action.
        Require.That(i).IsEqualTo(1);
    }
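The shadow-copy idea can be sketched as follows. This is not the actual ObservableCollectionHandler source: `ShadowingCollectionHandler` is a hypothetical name, Replace is simplified to a remove followed by an add, Move is ignored, and Reset is assumed to come only from Clear().

```csharp
using System;
using System.Collections.Generic;
using System.Collections.ObjectModel;
using System.Collections.Specialized;

// Hypothetical handler that keeps a shadow copy so Clear() can be replayed.
public sealed class ShadowingCollectionHandler<T>
{
    private readonly List<T> _shadow;
    private Action<T> _onAdded = delegate { };
    private Action<T> _onRemoved = delegate { };

    public ShadowingCollectionHandler(ObservableCollection<T> source)
    {
        if (source == null) throw new ArgumentNullException("source");
        _shadow = new List<T>(source);
        source.CollectionChanged += OnCollectionChanged;
    }

    public ShadowingCollectionHandler<T> OnItemAdded(Action<T> action)
    {
        _onAdded += action;
        return this;
    }

    public ShadowingCollectionHandler<T> OnItemRemoved(Action<T> action)
    {
        _onRemoved += action;
        return this;
    }

    private void OnCollectionChanged(object sender, NotifyCollectionChangedEventArgs e)
    {
        if (e.Action == NotifyCollectionChangedAction.Move)
        {
            return; // Nothing was added or removed.
        }

        if (e.Action == NotifyCollectionChangedAction.Reset)
        {
            // Reset carries no OldItems, so replay removals from the shadow copy.
            foreach (T item in _shadow.ToArray())
            {
                _onRemoved(item);
            }

            _shadow.Clear();
            return;
        }

        if (e.OldItems != null)
        {
            foreach (T item in e.OldItems)
            {
                _shadow.Remove(item);
                _onRemoved(item);
            }
        }

        if (e.NewItems != null)
        {
            foreach (T item in e.NewItems)
            {
                _shadow.Add(item);
                _onAdded(item);
            }
        }
    }
}
```

The cost of this approach is a second list holding references to every item, which is the price of being able to answer "what was removed?" after a Clear().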

Another common need with respect to ObservableCollections is the need to track which items were added, modified, and removed from the source collection. To facilitate this need I wrote the ChangeTracker class. ChangeTracker makes use of ObservableCollectionHandler to setup activities in response to changes in the source collection. ChangeTracker maintains a list of additions and removals from the source collection. It can also maintain a list of modified items assuming the items in the collection implement INotifyPropertyChanged.

Here is a sample unit test indicating its usage:

    [Test]
    public void GetChanges_AfterAdd_ShouldReturnAddedItems()
    {
        // Arrange: Setup the test context
        var source = new ObservableCollection<Employee>();
        var tracker = new ChangeTracker<Employee>(source);

        // Act: Perform the action under test
        var added = new Employee();
        source.Add(added);

        // Assert: Verify the results of the action.
        var changes = tracker.GetChanges(ChangeType.All);
        Require.That(changes.Any()).IsTrue();
        Require.That(tracker.HasChanges).IsTrue();

        var change = changes.First();
        Require.That(change).IsNotNull();
        Require.That(change.Type).IsEqualTo(ChangeType.Add);
        Require.That(change.Value).IsTheSameAs(added);
    }

The full source code and unit tests can be found here.

“We have to do {Insert Questionable Practice Here} in order to be consistent with the rest of the application.”

I’m so tired of hearing this statement. When a statement such as this is uttered, it is intended to do three things: 1) brush aside the necessity for a design change, 2) give the illusion of admitting that something is wrong with the current design (or lack thereof) while at the same time 3) justifying the current design.

“Consistency” is not a design principle. “Consistency” is a virtue if and only if you are following design principles, or style guidelines. “Consistency” describes your attitude toward good design principles and coding standards. It presumes the existence of good design principles and coding standards, and can never be used as a replacement for them. Who can count the coding crimes that have been committed in the name of “consistency?” The intent of a statement like this is to obliterate the distinction between good and bad design, and make bad design seem practical.

It isn’t.

Don’t fall for it. Repeating the same awful mess over and over again can never be good.

As the requirements put pressure on your design to change, you should change it. You should change it immediately. If the change is large, you may have to plan the change and execute it in stages, keeping the code functional and migrating it over time—but you should start immediately. In addition, you should generally complete one design change before embarking on another—at least if it’s in the same area of your application.

If you’re working in a team environment, you will need to communicate the necessity of medium or large-scale design shifts to your team. You should listen to possible objections (after all you may be missing some important information). If the change is small (meaning its scope is localized to the particular task you are working on), just do it. If the team is not generally receptive to improving the design of the code, either change the team or change teams.

You should consistently improve the design of code in the face of new requirements. You should consistently apply SOLID, DRY, YAGNI, TDD and other design and process principles to all new and existing code. You should consistently look for opportunities to refactor and increase test coverage. You should never use “consistency” as an excuse to shirk the responsibility of taking on a necessary change.

“Consistency” properly applies to two things: principles and style. You should consistently practice the best design principles and processes available for the craft of writing software. You should consistently conform to the style of code that your team endorses. If your team likes to put curly braces on newlines instead of at the end of a line, conform. If your team likes to use Hungarian notation (shudder), conform—though you should probably try to talk them out of it (ha!). If your team consistently writes functions with 4000 lines of code, rebel. Lead by example and write short, intuitively named, expectation satisfying functions.

Rationally there can be no conflict between consistency and good design. If you pretend there is then you have accepted a contradiction. Well-designed software is “well-designed” precisely because it makes the customer happy, is easily debugged, and is easily extended with new features. “Consistency,” it is argued, makes it easier for other developers to read the code. It isn’t easier to read if the code is full of poorly named variables and functions, disorganized class hierarchies, and 4000 line functions full of stale irrelevant comments. Well-designed code is easier to read, assuming a basic knowledge of OO concepts and design patterns. Well-designed code has a logical organization and leads the developer through the task of understanding it.

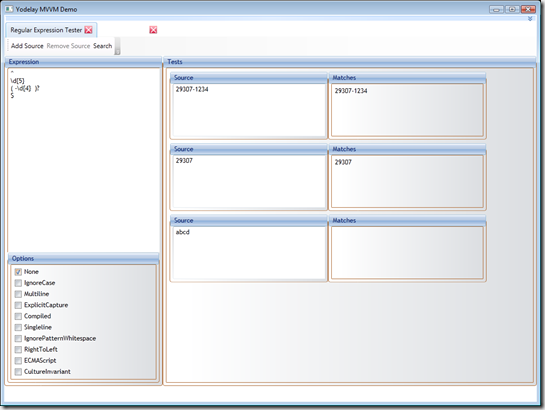

Yodelay has been updated. I’ve modified the Regular Expression tester so that it supports n-number of test contexts with a single expression. In addition, you can search regexlib.com for a useful expression.

This screen is fully implemented using MVVM. The search window serves as an example of using MVVM with dialogs.

I’ve also pulled in the themes from WpfThemes and integrated the ThemeManager.

Check it out!

Writing a function is an act of design. You must choose the placement (both physical and logical), signature, and name of the function carefully. Most people understand vaguely that functions should have minimal scope, but I’d like to draw attention to a couple of design implications of public functions that I haven’t heard discussed much. These features are "permission" and “precedent.” Simply by existing, a function indicates that it is okay to call it, and establishes a precedent for the choices that led you to create it with the characteristics you chose.

Permission

When would you not want to publicly expose a function? Or what are the reasons you might wish to refuse calling permission to a function?

Here is a typical example: Suppose you have a WidgetListEditor class that manages a list of widgets bound to a list editing control on a screen. Suppose that part of the class’s task is to sort the list of widgets prior to binding to the grid. The sorting of the list is ancillary to the primary task of managing the binding to the grid. Clients of the code should not instantiate an instance of WidgetListEditor to sort the contents of some arbitrary list of widgets elsewhere in the system. For this reason, the sorting methods of WidgetListEditor are internal concerns, and should not be exposed publicly. If the sorting methods are needed externally to WidgetListEditor, they should be extracted into first-class components of their own and provided to WidgetListEditor via some form of Dependency Injection.
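As a rough sketch of that extraction, the sorting can live behind its own small interface and be handed to the editor through its constructor. `Widget`, `IWidgetSorter`, `NameWidgetSorter`, and `PrepareForBinding` are hypothetical names invented for this illustration.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical domain type.
public sealed class Widget
{
    public string Name { get; set; }
}

// Sorting as a first-class component that anyone may use.
public interface IWidgetSorter
{
    IList<Widget> Sort(IEnumerable<Widget> widgets);
}

public sealed class NameWidgetSorter : IWidgetSorter
{
    public IList<Widget> Sort(IEnumerable<Widget> widgets)
    {
        return widgets.OrderBy(w => w.Name, StringComparer.Ordinal).ToList();
    }
}

// The editor consumes the sorter but exposes no sorting API of its own.
public sealed class WidgetListEditor
{
    private readonly IWidgetSorter _sorter;

    public WidgetListEditor(IWidgetSorter sorter)
    {
        if (sorter == null) throw new ArgumentNullException("sorter");
        _sorter = sorter;
    }

    // Returns the widgets in the order they should be bound to the grid.
    public IList<Widget> PrepareForBinding(IEnumerable<Widget> widgets)
    {
        return _sorter.Sort(widgets);
    }
}
```

Code elsewhere in the system that needs sorted widgets depends on `IWidgetSorter` directly, so the editor stays free to change.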

Why would it be wrong to simply allow the clients of the code to instantiate WidgetListEditor at will and use the sorting method? It exposes the rest of the code to changes in WidgetListEditor. WidgetListEditor could be modified such that it loads an expensive resource when constructed. The maintainers of WidgetListEditor have no reason to believe that adding the expensive resource should cause any problems because as far as they know, this class is only instantiated once on a couple of screens. Suddenly the system is brought to a crawl because it is in fact being used all over the place as a means of “reusing” the sorting code. This is a problem of responsibility.

Or:

WidgetListEditor needs to be changed to allow arbitrary sorting by the user. This requires that the sorting methods be changed to accept a sorting criterion, but that change is made more difficult because the methods are being consumed application-wide instead of on the one or two screens the class was built for.

Or:

WidgetListEditor no longer needs to presort the contents of the list before binding, so the developer wishes to remove the sorting code–only s/he can’t because the methods are being consumed application-wide instead of on the one or two screens the class was built for.

There’s a recurring theme here: What was the class built-for? What is its exposure to change? A public method is a kind of contract with the rest of the code. Changes to public methods must be considered with greater care because of the potential effects with the rest of the code-base. When we make a method private, we reduce the potential of damage to the system if the method needs to change, or be removed altogether. This is the design advantage of encapsulation. For this reason, every function in the system (and every class in a library) needs to have the least significant scope it is possible to give it.

There is another kind of permissions problem related to public methods that is harder to pinpoint. Sometimes a method is created that does a narrow-purpose task that is needed within the system, but has the potential to corrupt the system. Posit a financial system in which every transaction is recorded as a series of debit-credit pairs. For the most part the system does not allow direct editing of entries. Instead it functions through a series of transaction templates. You submit a transaction, and the template tells it which accounts to debit, which to credit, how much, etc. It is discovered that a rounding error creates a small reporting variance and your manager assigns you the task of creating a manual correction screen.

Since this is a transactional system, the right way to solve this problem is to create an AdjustmentTransactionTemplate and enter it into the system, but that would take a week, and you only have a day. So, you take a short-cut. You create a new method in the data access layer that lets you simply update the entry directly. You reason that since this is the only code that calls this method, it’s safe because you will be sure to update both the debit and credit together, and with the same amount. You do this, turn in the work, and everybody is happy.

What’s the problem here? There is now a method in the data access layer that allows you to manually update a specific entry without reference to a transaction template. Its existence implies that it’s okay to use it. The methods that accept the transaction template can validate the template to verify that the transaction will balance on debits and credits. This manual entry edit method can do no such validation. This means that it is now possible to get bad data into the system, which could have catastrophic consequences. At this point, it is a matter of “when,” not “if,” that will happen. If you or your manager considers “it’s faster” to be a general justification for violating the design of a system, then your project will become less maintainable as time goes by. You may need to update your design to make it faster to develop against, but that’s a different problem from the one I’m describing here.
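To illustrate the kind of validation the direct-update method bypasses, here is a small sketch under assumptions. `Entry`, `EntryType`, and `Ledger.Post` are hypothetical names, and a real system would validate against its transaction templates rather than a bare list of entries.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public enum EntryType
{
    Debit,
    Credit
}

// Hypothetical ledger entry.
public sealed class Entry
{
    public Entry(string account, EntryType type, decimal amount)
    {
        Account = account;
        Type = type;
        Amount = amount;
    }

    public string Account { get; }
    public EntryType Type { get; }
    public decimal Amount { get; }
}

// Hypothetical ledger whose only write path checks the balance.
public sealed class Ledger
{
    private readonly List<Entry> _entries = new List<Entry>();

    public int Count
    {
        get { return _entries.Count; }
    }

    // Accepts a whole transaction or nothing at all.
    public void Post(IReadOnlyCollection<Entry> transaction)
    {
        decimal debits = transaction
            .Where(e => e.Type == EntryType.Debit).Sum(e => e.Amount);
        decimal credits = transaction
            .Where(e => e.Type == EntryType.Credit).Sum(e => e.Amount);

        if (debits != credits)
        {
            throw new InvalidOperationException("Transaction does not balance.");
        }

        _entries.AddRange(transaction);
    }
}
```

A method that updates a single entry in place has no transaction to check, which is why it cannot offer the same guarantee.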

Precedent

Consider either of the above scenarios. What danger does the existence of either of the public functions pose to the rest of the code base? Isn’t the danger isolated to the particular components involved? What danger does the precedent pose to a code base?

In the legal system, the decision of a higher court on a relevant point is binding as legal precedent for a lower court. Conversely, the decision of a lower court on some legal matter is used as guidance to a higher court faced with the same decision. The reason for this is not that lawyers are crazy, but rather that the concrete circumstances possible in a legal case are too numerous for any one person or team to keep track of. When a new set of circumstances is considered by a court, it must make the best decision it can within the context of existing law and existing legal precedent. In most cases this keeps the range of legal issues organized in an intelligible manner. However, sometimes the courts make a decision that violates our sense of justice or propriety. In these cases, we can “refactor” by encouraging the legislature to explicitly change the law.

The key point is this: existing code is the best guideline for how to add new features to a code-base. You cannot expect future developers to look at your code-base and infer the principles you built the application on if you are violating those principles yourself. Further, if you are willing to violate the design principles in one case, you will likely find it much easier to violate them again and again. This means that even though you “think” your application was developed according to a particular design, “with a couple of exceptions,” it will not be apparent to anyone else. Objectively, then, the code was not designed according to any principles at all, but to your whims. To put the point another way, intent is communicated not by what’s in our heads, but by what’s in our code.

If you use WidgetListEditor for sorting some place else in your code, other developers will think that that’s what you intended. If you bypass the transaction template logic in the financial system, other developers will think it’s safe to do that as well. The question of whether code is designed well is more than just a question of whether it works. It’s a question of whether the intent of the design is consistently expressed by the code that implements it.

Conventions often function as a substitute for a grasp of design principles. For example, I’ve seen teams get up in arms about using Hungarian Notation but then write meaningless function names like “DoIt()” or “Process()”, or meaningless variable names like “intVar” or “intX”. These same teams often have little or no understanding of design principles, patterns, or concepts. I have met so many developers who have never heard of YAGNI, LRM, SRP, LSP, or Dependency Injection. How many developers do you know who have heard of patterns such as Singleton, Adapter, Decorator, Factory, Monostate, Proxy, Composite, or Command?

The use of conventions is often invoked as a means of aiding code clarity. And it does, but it is not the only or even the best means of attaining that goal. We need to learn to name classes, functions, and variables in meaningful ways; to write small functions that do one tiny little thing, and do it well; to write small single-purpose classes. All of the conventions in the world will not help you maintain a code-base if developers are free to write 3000-line methods with 27 layers of nested-conditionals and loops (and yes, I have seen this). Read “Clean Code” by Uncle Bob.

Design patterns, techniques, and processes are much harder to learn and master than a concrete list of do’s and don’ts (which is what most conventions amount to). Good software design is like playing a musical instrument–it requires dedication, repetition, and practice to learn and master.

Conventions do have a place. However, conventions are usually suggested by the provider of the tools you are using. For example, Microsoft has a conventions document for the .NET Framework which is applicable to all .NET Languages. (Thankfully, they eschew Hungarian Notation). It is true that this is worth learning simply in order to be a good developer citizen. My recommendation would be to simply use the conventions established by the tool-provider, or the dominant conventions within the community around the tool. Don’t waste company time and money adding your own tweaks and changes to the conventions document. Speaking personally, I would much rather maintain a code-base that is written cleanly and with good design and that violates every convention you could name.

Conventions are not a bad thing. The problem with conventions is that they are so often discussed in place of design principles. Conventions without design principles aid nothing. Without proper focus on good design, conventions can even hurt software quality because they give developers and managers the illusion of being thoughtful and disciplined about what they are doing.

Conventions can be useful in another way. There is a good bit of discussion going on right now about Convention over Configuration. CoC is a design short-cut, and consists of making decisions about how components of a software system interact by default, and only adding additional documentation when components deviate from the norm. CoC actually bolsters my point here, because it only tends to arise as a discussion point in systems that are already using good design patterns and practices.

There are four basic engineering aspects to developing a software system: Requirements, Test, Design, and Implementation. When a project is squeezed for time, it is the Design and Test aspects that get squeezed to make room for Implementation. Design activities are in effect frozen, which means that the design of the system will stay static even as new features are added. Testing simply stops, with all that that implies.

As implementation continues, new requirements are uncovered or areas of existing requirements are discovered to need modification or clarification. All of these factors imply necessary changes to the design of the system, but since design is frozen, the necessary changes don’t happen. Since testing isn’t happening, the full ramifications of this fact are not immediately felt.

The only remaining implementation choice is to squeeze square pegs of new features into the round holes of the existing design. This option requires more time to implement because time is spent creating overhead to deal with the dissonance between the design and the requirements. The resulting code is harder to understand because of the extra overhead. This option is more prone to error because more code exists than should be necessary to accomplish the task. Every additional line of code is a new opportunity for a bug.

All of these factors result in code that is harder to maintain over time. New features are difficult to implement because they will require the same kind of glue-code to marry a poor design to the new requirements. Further, each new feature deepens the dependency of the system on the poor design, making it harder to correct, and making it ever-easier to continue throwing bad code after bad. When considered in isolation, it will always seem cheaper to just add crap-code to get the new feature in rather than correct the design, but cumulatively, it costs much more. Eventually it will kill the project.

As bad as all this is, the problems don’t stop there. As long as the ill-designed code continues to exist in the system it serves to undermine the existing and all future features in two ways. 1) It becomes a pain point around which ever more glue-code will have to be written as the interaction of the ill-designed feature with the rest of the system changes. 2) It acts as a precedent in the code-base, demonstrating that low-quality code is acceptable so long as the developer can find a reason to rationalize it.